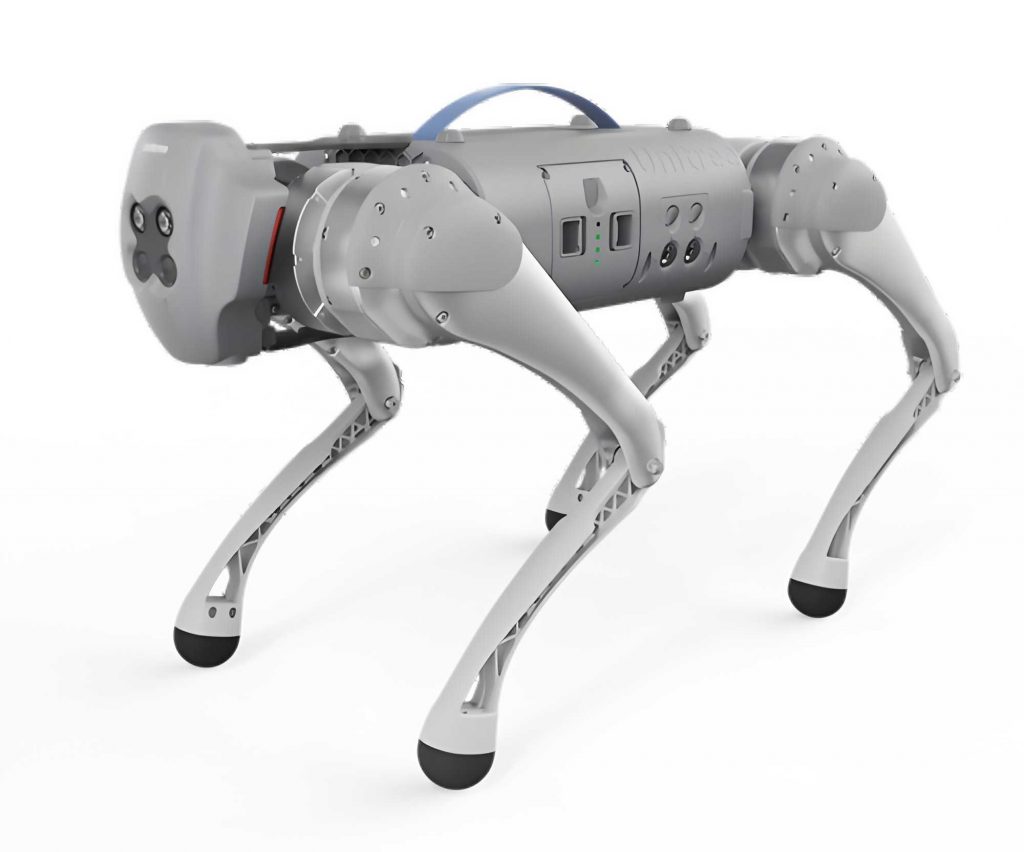

In recent years, quadruped robots, often referred to as robot dogs, have garnered significant attention in the field of bionic robotics due to their high adaptability to complex terrains. These robot dogs show promising applications in outdoor scenarios such as logistics transportation. However, when operating in dynamic and unpredictable environments, the joints of a quadruped robot continuously endure variable impact loads, leading to potential failures. Two common types of joint failures are locking faults, where the joint becomes rigidly fixed, and free-swinging faults, where the joint loses all actuation due to motor overload or physical damage. While locking faults may still allow the affected leg to provide some support, free-swinging faults render the leg incapable of supporting the robot, significantly compromising stability and mobility. To mitigate the adverse effects of joint failures, fault-tolerant gaits—adaptive locomotion strategies achieved through structural adjustments or motion modifications—are essential. These gaits enable a quadruped robot to maintain stable movement even after a joint failure, thereby enhancing its survival and adaptability in outdoor settings.

Existing research has primarily focused on fault-tolerant gaits for joint locking faults. For instance, methods involving additional counterweight mechanisms or kinematic model adjustments have been proposed to keep the robot’s center of mass (COM) within the support polygon formed by the functional legs. However, when a joint experiences a free-swinging failure, the affected leg cannot provide reliable support, and the robot’s dynamic model becomes highly uncertain. This uncertainty invalidates traditional model-based approaches, making it challenging to plan effective fault-tolerant gaits for such scenarios. To address this gap, we propose a novel fault-tolerant gait planning method based on the Twin Delayed Deep Deterministic Policy Gradient (TD3) algorithm, a reinforcement learning technique suited for continuous control problems. Our approach enables a quadruped robot to recover stable locomotion after a joint failure by adjusting the movements of the remaining functional legs through an interactive learning process.

The quadruped robot used in our study features a standard three-joint leg configuration, comprising abduction/adduction (side-swing), hip, and knee joints. When a joint, such as the knee joint in a leg, fails and enters a free-swinging state, it can no longer exert controlled forces, and the foot position becomes indeterminate. This indeterminacy disrupts the formation of a stable support region, making it difficult to apply conventional kinematic or dynamic models for gait planning. For example, if the knee joint in the right front leg fails, that leg loses its ability to support the robot or contribute to propulsion, leading to potential instability. The inherent uncertainties in the robot’s motion model under such conditions necessitate a model-free approach, which reinforcement learning provides by allowing the robot to learn optimal policies through trial and error.

Our method leverages the TD3 algorithm, which combines the Actor-Critic framework with deep deterministic policy gradients and clipped double Q-learning to address continuous action spaces efficiently. The TD3 algorithm mitigates the overestimation bias common in value-based reinforcement learning methods, leading to more stable and convergent training. The algorithm initializes three neural networks: two critic networks (Qθ1 and Qθ2) and one actor network (πφ), with parameters θ1, θ2, and φ, respectively. Target networks are initialized as copies of these networks: θ’1 ← θ1, θ’2 ← θ2, and φ’ ← φ. During training, the agent interacts with the environment by selecting actions based on the current state and noise: a = πφ(s) + ε, where ε ~ N(0, σ) represents Ornstein-Uhlenbeck noise to encourage exploration. Experiences (s, a, r, s’) are stored in a replay buffer, and mini-batches are sampled to update the networks. The target action for the next state is computed as a’ = πφ'(s’) + ε’, with ε’ clipped to [-c, c] to limit noise. The target Q-value is then derived using the minimum of the two target critics: y = r + γ min Qθ’i(s’, a’) for i=1,2, which helps reduce overestimation. The critic networks are updated by minimizing the loss: L(θi) = 𝔼[(Qθi(s, a) – y)^2], and the actor network is updated less frequently using the policy gradient: ∇φ J(φ) = 𝔼[∇a Qθ1(s, a) ∇φ πφ(s)]. Soft updates are applied to the target networks: θ’i ← τ θi + (1-τ) θ’i and φ’ ← τ φ + (1-τ) φ’, where τ is a small hyperparameter (e.g., 0.001) ensuring gradual updates.

The reward function is critical for guiding the learning process. We design a composite reward function that balances stability and forward progression for the quadruped robot. The total reward at each time step is given by: $$r_t = K_1 r_v + K_2 r_{yo} + K_3 r_{Fs} + K_4 r_{Km}$$ where:

– $r_v$ is the velocity reward term, encouraging the robot to maintain a target speed. It is computed as the negative squared error between the current velocity and the desired velocity.

– $r_{yo}$ is the y-axis offset penalty, penalizing deviations from straight-line movement. It is defined as $r_{yo} = y^2$, where y is the lateral deviation from the desired path.

– $r_{Fs}$ is the torso vibration penalty, reducing oscillations in the vertical direction for stability. It is calculated as $r_{Fs} = z^2$, where z is the vertical displacement from the nominal height.

– $r_{Km}$ is the movement incentive, encouraging continuous motion to avoid stagnation. It is expressed as $r_{Km} = T_s / T_f$, where $T_s$ is the sampling time and $T_f$ is the total simulation time.

The weights $K_1$, $K_2$, $K_3$, and $K_4$ are tuned through simulation to achieve optimal performance. For instance, after experimentation, we set $K_1 = 1$, $K_2 = -20$, $K_3 = -50$, and $K_4 = 25$ to emphasize stability and forward movement while penalizing excessive deviations.

To validate our approach, we conducted simulation experiments in MATLAB Simulink, where we modeled a quadruped robot with 12 degrees of freedom (three per leg). The simulation environment included the robot dynamics, and the TD3 algorithm was implemented to control the joint torques. We focused on a scenario where the knee joint of the right front leg experienced a free-swinging failure. The hyperparameters for the TD3 training are summarized in Table 1.

| Hyperparameter | Value |

|---|---|

| Actor Learning Rate | 0.0004 |

| Critic Learning Rate | 0.002 |

| Maximum Steps per Episode | 400 |

| Torque Limit | 25 Nm |

| Joint Control Range | -45° to 45° |

| Maximum Training Episodes | 20,000 |

| Soft Update Coefficient (τ) | 0.001 |

| Noise Clipping Range (c) | 0.5 |

During training, the robot learned to adapt its gait by interacting with the environment over 10-second episodes, with training stopping when the cumulative reward reached 400. The reward function curve, shown in Figure 1, demonstrates convergence, indicating that the robot successfully learned a stable fault-tolerant gait. The joint angular velocities were monitored during simulation. For the failed knee joint, the angular velocity remained near zero, confirming the failure state, while the other joints exhibited normal patterns. This adjustment allowed the robot to compensate for the failure using the remaining functional legs, particularly through the abduction/adduction and hip joints, which helped maintain balance and forward motion.

The robot’s body posture angles—roll, pitch, and heading—were also analyzed. Under knee joint failure, the heading angle showed significant variations to maintain balance, while roll and pitch angles remained relatively stable. This behavior is attributed to the specific location of the failed joint; different failure points may result in varying posture adjustments. The COM trajectory in the horizontal plane was plotted and compared to that of a robot without joint failure. Although some deviation occurred, the overall trajectory followed the desired path, with a maximum deviation of less than 8 mm in the X-direction and 5 mm in the Y-direction, confirming the effectiveness of the fault-tolerant gait.

We further validated our method through prototype experiments on a Spider-bot quadruped robot. During operation, we artificially induced a free-swinging failure in the right front knee joint at 0.2 seconds. The robot immediately switched to the learned fault-tolerant gait, as shown in Figure 2, where the leg segments maintained their positions while the robot continued moving. The COM trajectory from the experiments was consistent with the simulation results, with minor deviations due to real-world uncertainties. This consistency underscores the robustness of our TD3-based approach in real-world applications.

| Direction | Simulation Deviation (mm) | Prototype Deviation (mm) |

|---|---|---|

| X-axis | ≤ 8 | ≤ 8 |

| Y-axis | ≤ 5 | ≤ 6 |

In conclusion, our TD3-based fault-tolerant gait planning method enables quadruped robots to maintain stable locomotion after joint failures, enhancing their adaptability in complex environments. By leveraging reinforcement learning, the robot dog can online adjust its gait without relying on precise models, making it suitable for real-time applications. Future work will explore extensions to multiple joint failures and different terrains. The proposed approach represents a significant step forward in the reliability of quadruped robots, ensuring that these robot dogs can continue operating effectively even under adverse conditions.

The mathematical formulation of the TD3 algorithm can be extended to include the Bellman equation for value iteration: $$V^*(s) = \max_a \left[ R(s, a) + \gamma \sum_{s’} P(s’ | s, a) V^*(s’) \right]$$ where $V^*(s)$ is the optimal value function, $R(s, a)$ is the reward, $\gamma$ is the discount factor, and $P(s’ | s, a)$ is the transition probability. In our implementation, the TD3 algorithm approximates this through function approximation with neural networks, enabling efficient learning in high-dimensional spaces. This foundation allows the quadruped robot to autonomously develop fault-tolerant strategies, demonstrating the power of deep reinforcement learning in robotics.