In recent years, the integration of artificial intelligence into edge devices has revolutionized various fields, with quadruped robot dogs emerging as versatile platforms for applications such as surveillance, inspection, and navigation. As a researcher focused on computer vision and robotics, I have explored the critical role of data preprocessing in enhancing the performance of deep learning models for line-following tasks. The ability of a robot dog to accurately track paths or lines in dynamic environments relies heavily on the quality and diversity of training datasets. However, raw data collected from on-board sensors often suffer from issues like uneven lighting, shadows, and limited perspectives, which can degrade model accuracy. In this article, I delve into effective preprocessing techniques tailored for quadruped robot dog line-following systems, emphasizing methods to handle geometric transformations, illumination variations, and dataset augmentation. By leveraging mathematical formulations and empirical results, I aim to provide a comprehensive guide that bridges theoretical concepts and practical implementations, ensuring robust performance in real-world scenarios.

The core challenge in developing intelligent line-following systems for a robot dog lies in creating models that generalize well across diverse conditions. Deep learning approaches, particularly convolutional neural networks (CNNs), have shown promise in this domain, but their success hinges on high-quality input data. During my investigations, I observed that preprocessing steps such as rotation, flipping, and contrast enhancement significantly influence model training outcomes. For instance, inappropriate transformations can lead to misclassification of directional cues, causing the quadruped robot to deviate from its intended path. Moreover, hardware constraints of edge devices, like limited computational power and power supply, necessitate efficient preprocessing methods that balance performance and resource usage. Through systematic experimentation, I have identified a suite of techniques that address these challenges, which I will elaborate on in subsequent sections.

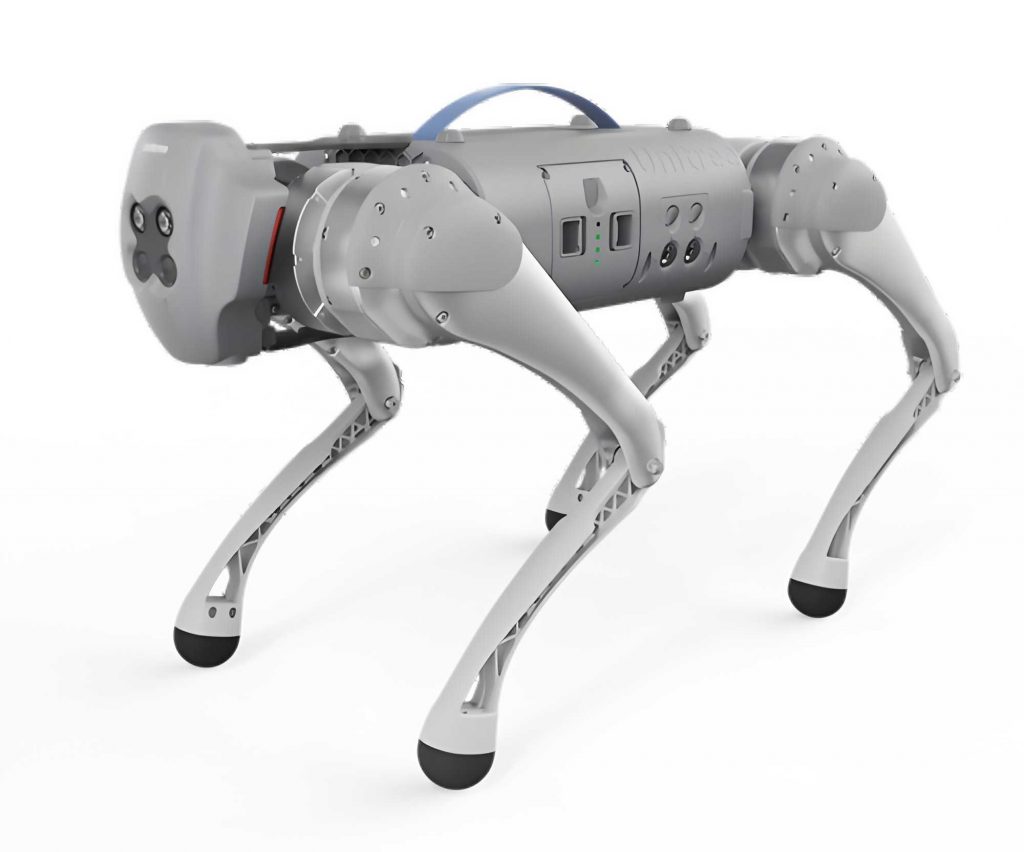

To contextualize this work, it is essential to understand the dataset used in my experiments. The data were collected using a commercial quadruped robot dog equipped with an abdominal camera, navigating a custom-designed map simulating real-world line-following scenarios. This map included straight paths, curves, intersections, and obstacles, with the line color varying to mimic environmental diversity. The robot dog was manually operated to traverse the paths multiple times, capturing images at a frame rate of 10 frames per second. Each image was labeled into categories based on the required action: left deviation, right deviation, left turn, right turn, straight movement, and other scenarios involving external sensors. The raw images, sized 232×200 pixels in RGB format, exhibited common issues such as occlusions from the robot’s limbs, shadows, and varying brightness levels. These imperfections underscore the necessity of preprocessing to ensure the dataset’s suitability for training deep learning models.
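The six action categories and the frame format described above can be pinned down in code. The following is a minimal sketch only. The class names, their integer order, and the (height, width) reading of 232×200 are assumptions. That reading was chosen to match the [3, 232, 200] image shape listed in the hyperparameter table. None of this is the labeling scheme actually used to build the dataset.

```python
from enum import IntEnum

import numpy as np


class Action(IntEnum):
    """Hypothetical class-index encoding of the six action categories."""
    LEFT_DEVIATION = 0
    RIGHT_DEVIATION = 1
    LEFT_TURN = 2
    RIGHT_TURN = 3
    STRAIGHT = 4
    OTHER = 5  # scenarios that rely on external sensors


# Assumed (height, width) of the 232x200 RGB frames; 8-bit pixels are also assumed.
FRAME_HW = (232, 200)


def check_frame(img: np.ndarray) -> bool:
    """Return True if `img` is an 8-bit RGB frame of the expected size."""
    return img.shape == (*FRAME_HW, 3) and img.dtype == np.uint8


def one_hot(label: Action, num_classes: int = len(Action)) -> np.ndarray:
    """One-hot target vector for a single label."""
    vec = np.zeros(num_classes, dtype=np.float32)
    vec[int(label)] = 1.0
    return vec
```

An `IntEnum` keeps label names readable in logs while still indexing directly into the network's output vector.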

The preprocessing pipeline I developed comprises multiple method sets, each targeting specific data quality issues. Let me begin with the handling of lighting and shadows, which are prevalent in outdoor deployments. Shadows can obscure the line features, leading to erroneous detections by the model. Traditional approaches involve color space transformations, such as converting RGB images to HSV or Lab spaces, to separate luminance and chrominance components. For a pixel in an RGB image, the conversion to HSV can be represented as:

$$ H = \begin{cases}
0^\circ & \text{if } \max = \min \\
60^\circ \times \left( \frac{G - B}{\max - \min} \right) \bmod 360^\circ & \text{if } \max = R \\
60^\circ \times \left( \frac{B - R}{\max - \min} + 2 \right) & \text{if } \max = G \\
60^\circ \times \left( \frac{R - G}{\max - \min} + 4 \right) & \text{if } \max = B
\end{cases} $$

$$ S = \begin{cases}
0 & \text{if } \max = 0 \\
\frac{\max - \min}{\max} & \text{otherwise}
\end{cases} $$

$$ V = \max(R, G, B) $$

where \( R, G, B \) are the red, green, and blue channel values, and \( \max \) and \( \min \) are the maximum and minimum of these values. However, I found that these methods often fail to preserve line clarity. Instead, I adopted a mean-based approach. It computes the average intensity of the pixels in the whitish-gray regions, which are selected by an intensity threshold in the range 30–60, and adjusts them to match the background. This is formulated as:

$$ I_{\text{corrected}}(x,y) = \begin{cases}
\mu & \text{if } I_{\text{original}}(x,y) > T \\
I_{\text{original}}(x,y) & \text{otherwise}
\end{cases} $$

where \( I_{\text{original}} \) is the input image, \( T \) is the threshold (set to 45 in my case), and \( \mu \) is the mean intensity of pixels above \( T \). This method effectively reduces shadows but may introduce noise, which I address using morphological operations like opening and closing with a 5×5 kernel. The opening operation, defined as erosion followed by dilation, helps remove small noise particles:

$$ A \circ B = (A \ominus B) \oplus B $$

where \( A \) is the image, \( B \) is the structuring element, \( \ominus \) denotes erosion, and \( \oplus \) denotes dilation. Similarly, closing (dilation followed by erosion) fills gaps: \( A \bullet B = (A \oplus B) \ominus B \). These steps collectively enhance image quality by mitigating shadow effects while maintaining line integrity.
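The shadow-handling steps above can be sketched in NumPy as follows. This sketch rests on several assumptions:

- a single-channel intensity image on the 0–255 scale (the text does not say whether the correction runs on grayscale or per channel);
- a flat 5×5 square structuring element;
- edge-replicated borders;
- opening applied before closing.

The function names are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def mean_fill_above_threshold(gray: np.ndarray, threshold: float = 45.0) -> np.ndarray:
    """I_corrected = mu where I > T, else I; mu is the mean of the pixels above T."""
    img = np.asarray(gray, dtype=np.float64)
    mask = img > threshold
    if not mask.any():
        return img.copy()  # no pixel exceeds T, so there is no mean to substitute
    mu = img[mask].mean()
    return np.where(mask, mu, img)


def _window_reduce(img: np.ndarray, size: int, reducer) -> np.ndarray:
    """Apply `reducer` over every size x size neighbourhood (edge-replicated borders)."""
    pad = size // 2
    windows = sliding_window_view(np.pad(img, pad, mode="edge"), (size, size))
    return reducer(windows, axis=(-2, -1))


def erode(img: np.ndarray, size: int = 5) -> np.ndarray:
    return _window_reduce(img, size, np.min)  # grayscale erosion with a flat square element


def dilate(img: np.ndarray, size: int = 5) -> np.ndarray:
    return _window_reduce(img, size, np.max)  # grayscale dilation with a flat square element


def opening(img: np.ndarray, size: int = 5) -> np.ndarray:
    return dilate(erode(img, size), size)  # erosion then dilation: removes small bright specks


def closing(img: np.ndarray, size: int = 5) -> np.ndarray:
    return erode(dilate(img, size), size)  # dilation then erosion: fills small dark gaps


def remove_shadows(gray: np.ndarray, threshold: float = 45.0, size: int = 5) -> np.ndarray:
    """Mean fill, then opening, then closing (the order of the last two is an assumption)."""
    return closing(opening(mean_fill_above_threshold(gray, threshold), size), size)
```

The result is a float array. Clip it and cast it back with `np.clip(out, 0, 255).astype(np.uint8)` if later stages expect 8-bit input.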

Another critical aspect is geometric transformations, such as rotation and flipping, which augment the dataset to cover various orientations. However, for a quadruped robot dog, certain transformations must be applied judiciously to avoid confusing the model. For example, horizontally flipping an image labeled as left or right deviation can produce a sample that resembles the opposite category, leading to misclassification during inference. Mathematically, a horizontal flip maps a pixel at position \( (x, y) \) to \( (w - 1 - x, y) \), where \( w \) is the image width and columns are indexed from 0. To prevent this, I exclude horizontal flipping for the deviation categories but allow rotations of up to 45 degrees to simulate minor orientation changes. The rotation transformation can be expressed using an affine matrix:

$$ \begin{bmatrix} x' \\ y' \\ 1 \end{bmatrix} = \begin{bmatrix} \cos \theta & -\sin \theta & t_x \\ \sin \theta & \cos \theta & t_y \\ 0 & 0 & 1 \end{bmatrix} \begin{bmatrix} x \\ y \\ 1 \end{bmatrix} $$

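where \( \theta \) is the rotation angle (e.g., 15° or 30°), and \( t_x, t_y \) are translation offsets that keep the image centered. For rotation about the image center \( (c_x, c_y) \), requiring the center to map to itself gives \( t_x = c_x(1 - \cos\theta) + c_y \sin\theta \) and \( t_y = c_y(1 - \cos\theta) - c_x \sin\theta \). This approach enriches the dataset without compromising label consistency, ensuring the robot dog can handle a range of path deviations.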
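A minimal NumPy sketch of the centered affine rotation follows. It builds the 3×3 matrix with offsets chosen so that the image center \( (c_x, c_y) \) maps to itself. It then resamples by inverse mapping with nearest-neighbour lookup. The function names, the zero fill for uncovered corners, and the nearest-neighbour choice are assumptions. A production pipeline would more likely call a library routine such as OpenCV's `warpAffine` with bilinear interpolation.

```python
import numpy as np


def rotation_matrix(theta_deg: float, cx: float, cy: float) -> np.ndarray:
    """Affine matrix [[cos, -sin, t_x], [sin, cos, t_y], [0, 0, 1]] rotating about (cx, cy)."""
    t = np.deg2rad(theta_deg)
    c, s = np.cos(t), np.sin(t)
    tx = cx * (1.0 - c) + cy * s  # offsets chosen so the centre maps to itself
    ty = cy * (1.0 - c) - cx * s
    return np.array([[c, -s, tx], [s, c, ty], [0.0, 0.0, 1.0]])


def rotate_image(img: np.ndarray, theta_deg: float, fill=0) -> np.ndarray:
    """Rotate an (H, W) or (H, W, C) image about its centre with nearest-neighbour sampling.

    Pixel rows grow downward, so a positive angle appears clockwise on screen.
    Output pixels whose source falls outside the input are set to `fill`.
    """
    h, w = img.shape[:2]
    m_inv = np.linalg.inv(rotation_matrix(theta_deg, (w - 1) / 2.0, (h - 1) / 2.0))
    ys, xs = np.mgrid[0:h, 0:w]
    dst = np.stack([xs.ravel(), ys.ravel(), np.ones(h * w)])  # homogeneous (x', y', 1)
    src = m_inv @ dst  # inverse mapping leaves no holes in the output
    sx = np.rint(src[0]).astype(int)
    sy = np.rint(src[1]).astype(int)
    valid = (sx >= 0) & (sx < w) & (sy >= 0) & (sy < h)
    out = np.full((h * w,) + img.shape[2:], fill, dtype=img.dtype)
    out[valid] = img[sy[valid], sx[valid]]
    return out.reshape(img.shape)
```

For example, `[rotate_image(frame, a) for a in (15, 30)]` yields the two rotated variants of a frame. The original label is kept unchanged, since rotation is not a label-flipping transformation.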

Contrast enhancement is vital for improving visibility in low-light conditions, which are common in indoor or shaded environments. Given the hardware limitations of a robot dog’s camera, I employed localized histogram equalization on individual RGB channels to boost contrast. Consider a channel \( C \) over a region of \( M \times N \) pixels: the whole image for global equalization, or a single tile for the localized variant. Each intensity level \( u \) is mapped to:

$$ C_{\text{eq}}(u) = \operatorname{round}\left( (L - 1) \cdot \frac{1}{M \times N} \sum_{i=0}^{u} h_C(i) \right) $$

where \( h_C \) is the histogram of channel \( C \) and \( L \) is the number of intensity levels (256 for 8-bit images). The normalized sum is the cumulative distribution function of the channel's intensities. This mapping redistributes pixel intensities to span the full dynamic range, enhancing line prominence. Additionally, I experimented with sharpening filters based on the Laplacian operator to accentuate edges:

$$ \nabla^2 I = \frac{\partial^2 I}{\partial x^2} + \frac{\partial^2 I}{\partial y^2} $$

The negative of this operator can be approximated by the convolution kernel \( \begin{bmatrix} 0 & -1 & 0 \\ -1 & 4 & -1 \\ 0 & -1 & 0 \end{bmatrix} \). Adding that response to the image, \( I_{\text{sharp}} = I - \nabla^2 I \), accentuates edges. These techniques collectively address the blurriness and low contrast often encountered in raw images from the quadruped robot dog.
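Both contrast steps can be sketched in a few lines of NumPy. The equalization below is the global, per-channel version of the cumulative-histogram mapping, rescaled to \( [0, L-1] \). A localized variant would apply the same mapping tile by tile; OpenCV's CLAHE additionally clips the histogram and interpolates between tiles. The sharpening step adds the response of the 4-neighbour kernel to the image. Function names, edge-replicated borders, and 8-bit input are assumptions.

```python
import numpy as np


def equalize_channel(channel: np.ndarray, levels: int = 256) -> np.ndarray:
    """Global histogram equalization of one uint8 channel using its normalized CDF."""
    hist = np.bincount(channel.ravel(), minlength=levels)  # h_C(i)
    cdf = np.cumsum(hist) / channel.size                     # (1 / MN) * sum_{i<=u} h_C(i)
    lut = np.round((levels - 1) * cdf).astype(np.uint8)      # rescale to [0, L - 1]
    return lut[channel]


def equalize_rgb(img: np.ndarray) -> np.ndarray:
    """Equalize each RGB channel independently."""
    return np.stack([equalize_channel(img[..., k]) for k in range(img.shape[-1])], axis=-1)


# Negative discrete Laplacian; it is symmetric, so correlation and convolution coincide.
NEG_LAPLACIAN = np.array([[0, -1, 0], [-1, 4, -1], [0, -1, 0]], dtype=np.float64)


def sharpen(channel: np.ndarray) -> np.ndarray:
    """I_sharp = I - laplacian(I), clipped back to the uint8 range."""
    img = channel.astype(np.float64)
    h, w = img.shape
    padded = np.pad(img, 1, mode="edge")
    response = np.zeros_like(img)
    for dy in range(3):
        for dx in range(3):
            response += NEG_LAPLACIAN[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return np.clip(img + response, 0, 255).astype(np.uint8)
```

Equalizing R, G and B independently can shift hues. This may be acceptable here, because the line is separated by contrast rather than exact color, but it is a trade-off worth checking on the actual data.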

To systematize the preprocessing, I organized the methods into distinct sets applied sequentially. Let \( T_1 \) denote the geometric transformation set, which includes rotation by 15° and 30° and resizing to a uniform dimension. For an input image \( \text{img} \), this produces an augmented set \( I_1 = \{ \text{img}, \text{img}_{15}, \text{img}_{30} \} \). Next, \( T_2 \) encompasses contrast enhancement, blurring, and sharpening, applied to each image in \( I_1 \) to generate \( I_2 \). Similarly, \( T_3 \) handles shadow removal and morphological operations, replacing the original images with their processed versions to form \( I_3 \). A base set \( T_4 \), comprising grayscale conversion and RGB adjustments, is applied as an auxiliary step. The overall pipeline can be summarized as:

$$ I_{\text{final}} = T_3(T_2(T_1(\text{img}))) $$

This structured approach ensures comprehensive data augmentation while preserving semantic labels, crucial for training accurate deep learning models for the robot dog.
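The composition \( T_3(T_2(T_1(\text{img}))) \) mixes two kinds of steps. \( T_1 \) and \( T_2 \) expand the image set, while \( T_3 \) replaces each image one-to-one. The sketch below makes that distinction explicit. It assumes that \( T_2 \) keeps each input alongside its enhanced variants, which the text does not state. The `stand_in` transform is a hypothetical placeholder for the real rotation, enhancement and shadow-removal functions.

```python
from typing import Callable, List

import numpy as np

Image = np.ndarray
Transform = Callable[[Image], Image]


def expand(images: List[Image], transforms: List[Transform], keep_original: bool = True) -> List[Image]:
    """Set-valued step: each image contributes itself (optionally) plus one output per transform."""
    out: List[Image] = []
    for img in images:
        if keep_original:
            out.append(img)
        out.extend(t(img) for t in transforms)
    return out


def replace(images: List[Image], transform: Transform) -> List[Image]:
    """One-to-one step: each image is replaced by its processed version."""
    return [transform(img) for img in images]


def run_pipeline(img: Image, t1: List[Transform], t2: List[Transform], t3: Transform) -> List[Image]:
    i1 = expand([img], t1)  # T1: {img, img_15, img_30}
    i2 = expand(i1, t2)     # T2: contrast / blur / sharpen variants of every image in I1
    return replace(i2, t3)  # T3: shadow removal + morphology, replacing each image


def stand_in(im: Image) -> Image:
    """Hypothetical placeholder; swap in a real transform."""
    return im.copy()


final = run_pipeline(np.zeros((232, 200, 3), np.uint8),
                     t1=[stand_in, stand_in], t2=[stand_in] * 3, t3=stand_in)
print(len(final))  # 12
```

Under these assumptions, one raw frame becomes \( 3 \times (1 + 3) = 12 \) samples. The real expansion factor depends on which transforms are applied to which classes, so this count is not meant to reproduce the dataset size reported below.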

In my experiments, I evaluated the impact of these preprocessing techniques on a ResNet-50 model trained for multi-class classification. The dataset initially contained 1,112 raw images, which were expanded to 12,167 samples after augmentation. The model was configured with the following hyperparameters:

| Parameter | Value |
|---|---|
| Number of Classes | 6 |
| Total Images (Raw) | 1,112 |
| Epochs | 100 |
| Batch Size | 32 |
| Image Shape | [3, 232, 200] |
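The hyperparameters in the table can be captured as a small immutable configuration object. This is a sketch with illustrative field names. The (channels, height, width) reading of the image shape is an assumption. The `steps_per_epoch` helper is a hypothetical addition showing how the batch size translates into optimizer steps.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class TrainConfig:
    """Hyperparameters from the table above; field names are illustrative."""
    num_classes: int = 6
    raw_images: int = 1112
    epochs: int = 100
    batch_size: int = 32
    image_shape: Tuple[int, int, int] = (3, 232, 200)  # assumed (channels, height, width)

    def steps_per_epoch(self, num_train_samples: int) -> int:
        """Mini-batches per epoch, counting a final partial batch."""
        return math.ceil(num_train_samples / self.batch_size)


cfg = TrainConfig()
# Upper bound only: assumes all 12,167 augmented samples were used for training.
print(cfg.steps_per_epoch(12_167))  # 381
```

The real training split is smaller than the full augmented set, and the split ratio is not stated, so 381 steps per epoch is a ceiling rather than the actual figure.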

Training and validation were conducted on separate splits of the dataset, and the results show a clear improvement in model performance after preprocessing. The loss function, categorical cross-entropy, is defined as:

$$ L = -\frac{1}{N} \sum_{i=1}^{N} \sum_{c=1}^{C} y_{i,c} \log(\hat{y}_{i,c}) $$

where \( N \) is the number of samples, \( C \) is the number of classes, \( y_{i,c} \) is the true label, and \( \hat{y}_{i,c} \) is the predicted probability. Without preprocessing, the training loss converged to 0.145, with top-1 accuracy of 95% and top-5 accuracy of 99.99% on the training set. On the validation set, the loss was 0.55, with top-1 accuracy of 85% and top-5 accuracy of 99.9%. Testing on 50 unseen images yielded an accuracy of 80%, as shown below:

| Sample Type | Number of Samples | Prediction Errors |
|---|---|---|
| Right Deviation | 6 | 3 |
| Straight | 10 | 2 |
| Left Deviation | 10 | 4 |
| Right Turn | 10 | 0 |
| Left Turn | 7 | 1 |
| Other | 7 | 0 |
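The loss and the top-k metrics reported above can be computed directly from predicted class probabilities. The following NumPy sketch implements the categorical cross-entropy formula and top-k accuracy for one-hot targets. The function names and the toy probabilities are illustrative assumptions, not outputs of the trained model.

```python
import numpy as np


def categorical_cross_entropy(y_true: np.ndarray, y_prob: np.ndarray, eps: float = 1e-12) -> float:
    """L = -(1/N) * sum_i sum_c y[i, c] * log(y_hat[i, c]) for one-hot y_true of shape (N, C)."""
    y_prob = np.clip(y_prob, eps, 1.0)  # guard against log(0)
    return float(-np.mean(np.sum(y_true * np.log(y_prob), axis=1)))


def top_k_accuracy(labels: np.ndarray, y_prob: np.ndarray, k: int = 1) -> float:
    """Fraction of samples whose true class is among the k highest-probability classes."""
    top_k = np.argsort(y_prob, axis=1)[:, -k:]
    return float(np.mean(np.any(top_k == labels[:, None], axis=1)))


probs = np.array([[0.7, 0.1, 0.05, 0.05, 0.05, 0.05],
                  [0.1, 0.2, 0.5, 0.1, 0.05, 0.05]])
labels = np.array([0, 1])
one_hot = np.eye(6)[labels]
print(categorical_cross_entropy(one_hot, probs))
print(top_k_accuracy(labels, probs, k=1), top_k_accuracy(labels, probs, k=5))  # 0.5 1.0
```

With only six classes, a top-5 prediction misses only when the true class is ranked last. Top-5 accuracy near 100% is therefore expected, and top-1 accuracy is the more informative number here.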

After applying the preprocessing pipeline, the model achieved a training loss of 0.05, with top-1 accuracy of 96.7% and top-5 accuracy of 99.99%. Validation loss improved to 0.49, with top-1 accuracy of 88.7% and top-5 accuracy of 99.9%. On the same test set, accuracy rose to 92%, with only 4 errors:

| Sample Type | Number of Samples | Prediction Errors |
|---|---|---|
| Right Deviation | 6 | 1 |
| Straight | 10 | 1 |
| Left Deviation | 10 | 1 |
| Right Turn | 10 | 0 |
| Left Turn | 7 | 1 |
| Other | 7 | 0 |
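The headline test accuracies follow directly from the per-class counts in the two tables above. The short check below recomputes them. It also reports per-class accuracy, which shows that the largest gain is in the Left Deviation class. The dictionary layout and function names are illustrative.

```python
# Per-class (samples, errors) taken from the two test-set tables above.
before = {"Right Deviation": (6, 3), "Straight": (10, 2), "Left Deviation": (10, 4),
          "Right Turn": (10, 0), "Left Turn": (7, 1), "Other": (7, 0)}
after = {"Right Deviation": (6, 1), "Straight": (10, 1), "Left Deviation": (10, 1),
         "Right Turn": (10, 0), "Left Turn": (7, 1), "Other": (7, 0)}


def overall_accuracy(table: dict) -> float:
    """Correct predictions divided by total test samples."""
    total = sum(n for n, _ in table.values())
    errors = sum(e for _, e in table.values())
    return (total - errors) / total


def per_class_accuracy(table: dict) -> dict:
    """Accuracy within each sample type."""
    return {name: (n - e) / n for name, (n, e) in table.items()}


print(overall_accuracy(before), overall_accuracy(after))  # 0.8 0.92
print(per_class_accuracy(before)["Left Deviation"], per_class_accuracy(after)["Left Deviation"])  # 0.6 0.9
```

With only 50 test images, each error moves accuracy by two percentage points. The per-class figures therefore rest on very few samples each.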

These results underscore the efficacy of the preprocessing methods in enhancing model robustness for the quadruped robot dog. Deployment on the actual hardware confirmed that the preprocessed data led to smoother and more reliable line-following behaviors, with reduced instances of path deviation.

In conclusion, the preprocessing techniques I have detailed—addressing shadows, geometric transformations, contrast issues, and dataset augmentation—play a pivotal role in optimizing deep learning models for quadruped robot dog line following. By carefully tailoring these methods to the constraints of edge devices and the specifics of the application, I achieved notable improvements in model accuracy and real-world performance. Future work could explore adaptive preprocessing that dynamically adjusts to environmental changes, further advancing the autonomy of robot dogs in complex scenarios. The integration of these approaches not only benefits line-following tasks but also sets a precedent for other vision-based applications in robotics, emphasizing the importance of high-quality data in AI-driven systems.