In the field of equipment maintenance, remanufacturing, and end-of-life product recycling, disassembly plays a critical role in recovering valuable components, reducing waste, and conserving resources. Traditional disassembly methods often rely on sequential operations, which can be time-consuming and inefficient. With advancements in robot technology, parallel disassembly using multiple robots has emerged as a promising approach to enhance efficiency and reduce energy consumption. This article explores a robotic parallel disassembly sequence planning (RP-DSP) model that minimizes both completion time and energy consumption, and introduces a novel genetic algorithm enhanced by reinforcement learning to solve this complex multi-objective optimization problem. The integration of robot technology allows for simultaneous disassembly tasks, leveraging the flexibility and precision of robotic systems to handle intricate product structures. By incorporating reinforcement learning, the algorithm dynamically adapts its strategies during iteration, leading to superior performance in finding near-optimal solutions. Throughout this discussion, the term “robot technology” will be emphasized to highlight its pivotal role in modern disassembly processes.

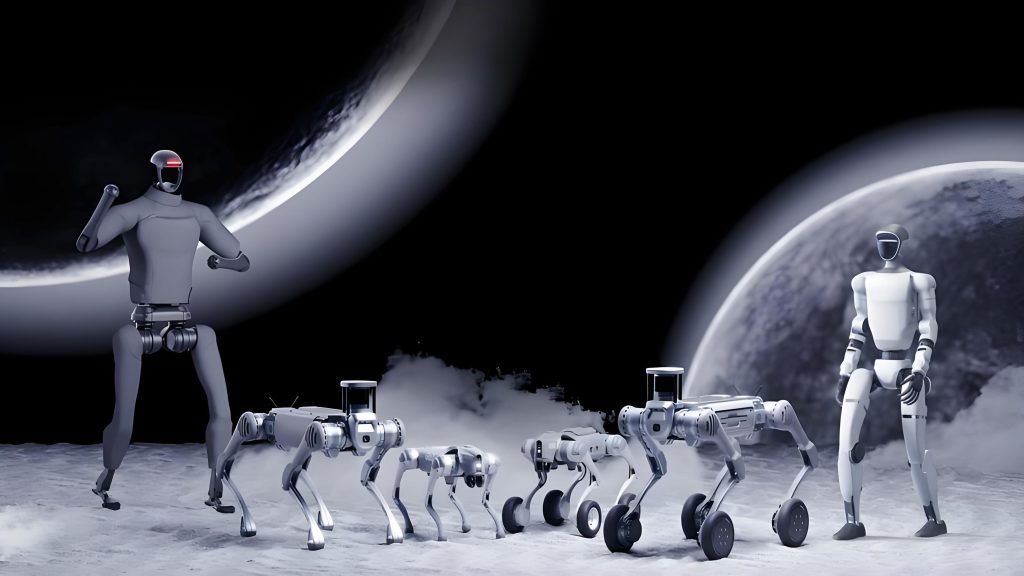

The RP-DSP problem involves assigning disassembly tasks to multiple robots operating in parallel, considering constraints such as precedence relationships, tool changes, and varying robot capabilities. Each robot may have different power consumption rates and disassembly times for specific tasks, making the optimization challenging. The primary objectives are to minimize the maximum completion time across all robots and the total energy consumed, which includes operational energy, tool change energy, and standby energy. This problem is particularly relevant in industries where sustainability and efficiency are paramount, such as automotive and electronics recycling. Robot technology enables the deployment of multiple robots working concurrently on different parts of a product, as illustrated in the following visual representation of a parallel disassembly setup.

To formalize the RP-DSP problem, a mixed-integer linear programming (MILP) model is constructed. Let $I$ denote the set of disassembly tasks, where $i, j \in I$ represent individual tasks, and $M$ represent the set of robots, with $m \in M$. The disassembly time for task $i$ on robot $m$ is given by $t_{im}$, and $o_i$ indicates the tool type required for task $i$, with $O$ being the total number of tool types. The tool change time is denoted as $t_b$, and precedence relationships are defined by $p_{ij}$, where $p_{ij} = 1$ if task $i$ must precede task $j$. Energy parameters include $e_{d_m}$ for operational energy per unit time, $e_{c_m}$ for tool change energy, and $e_{s_m}$ for standby energy, all specific to robot $m$. Decision variables include $x_{im}$ (1 if task $i$ is assigned to robot $m$, 0 otherwise), $y_m$ (1 if robot $m$ is enabled), $z_{imk}$ (1 if task $i$ is at position $k$ on robot $m$), $a_{im}$ (1 if task $i$ is the last task on robot $m$), $b_m$ (1 if the first and last tasks on robot $m$ require different tools), $d_{mk}$ (1 if tasks at positions $k$ and $k+1$ on robot $m$ require different tools), $t_{f_i}$ (completion time of task $i$), and $T_{s_m}$ (start time of robot $m$). The overall completion time $TC$ is the maximum among all robots’ completion times.

The multi-objective function aims to minimize both the completion time and energy consumption. Mathematically, it is expressed as:

$$ \min F = (f_1, f_2) $$

where $f_1 = TC$ represents the maximum completion time, and $f_2$ is the total energy consumption, calculated as:

$$ f_2 = \sum_{m \in M} \sum_{i \in I} e_{d_m} x_{im} t_{im} + \sum_{m \in M} e_{c_m} t_b \left( \sum_{k \in K’} d_{mk} + b_m \right) + \sum_{m \in M} e_{s_m} \left( TC – \sum_{i \in I} x_{im} t_{im} \right) $$

Here, $K’$ denotes the set of positions excluding the last one. The constraints ensure feasible disassembly sequences, including complete disassembly of all tasks, precedence relationships, and tool change considerations. For instance, the constraint for task completion times considering precedence is:

$$ t_{f_j} – t_{f_i} + \psi \left( 3 – \sum_{m \in M} x_{im} – \sum_{m \in M} x_{jm} – p_{ij} \right) \geq \sum_{m \in M} x_{jm} t_{jm} \quad \forall i, j \in I, i \neq j $$

where $\psi$ is a large number. Tool change constraints between adjacent tasks on a robot are modeled as:

$$ \sum_{i \in I} o_i z_{imk} – \sum_{j \in I} o_j z_{jm,k+1} \leq O \cdot d_{mk} + O \cdot \left( 2 – \sum_{i \in I} z_{imk} – \sum_{j \in I} z_{jm,k+1} \right) $$

and similarly for the reverse difference. These constraints ensure that the disassembly process adheres to practical limitations, leveraging robot technology to handle complex interactions.

To solve the RP-DSP problem efficiently, a genetic algorithm based on reinforcement learning (MOGGA-QL) is proposed. This algorithm combines the global search capabilities of genetic algorithms with the adaptive decision-making of reinforcement learning, specifically Q-learning. The algorithm begins with an initialization phase that uses heuristic rules to generate high-quality initial solutions. For example, tasks with precedence constraints are prioritized, and robots with lower power consumption are assigned more tasks to reduce energy usage. The encoding strategy represents disassembly sequences and robot assignments as integer strings, while the decoding strategy determines task time windows by considering precedence and tool changes to minimize idle time.

The genetic algorithm employs selection, crossover, and mutation operators. The selection probability for a chromosome $x_i$ is based on its hypervolume value $h(x_i)$, calculated as:

$$ P(x_i) = 0.1 + 0.9 \frac{h(x_i)}{\sum_{i=1}^N h(x_i)} $$

where $h(x_i) = \left( \frac{r_1^* – f_1(x_i)}{r_1^*} \right) \times \left( \frac{r_2^* – f_2(x_i)}{r_2^*} \right)$, with $r^*$ being a reference point. Five crossover strategies are used: single-point, double-point inner, double-point outer, multi-point, and random crossover, all designed to maintain feasibility under precedence constraints. Two mutation strategies, exchange and insertion mutation, ensure that mutated sequences remain valid by swapping or inserting tasks within their feasible ranges.

Reinforcement learning is integrated through Q-learning to dynamically select the best crossover and mutation strategies during iteration. The state $s^*$ of the algorithm is evaluated based on the average fitness $f^*$, diversity $d^*$, and best fitness $m^*$ of the population:

$$ s^* = w_1 f^* + w_2 d^* + w_3 m^* $$

with weights $w_1 = 0.35$, $w_2 = 0.35$, and $w_3 = 0.3$. The state space is divided into 20 intervals, and the action space includes 10 combinations of crossover and mutation strategies. The reward $r$ is the increment in population hypervolume:

$$ r = \sum_{i=1}^N h(x_i^g) – \sum_{i=1}^N h(x_i^{g-1}) $$

The Q-table is updated using the formula:

$$ Q(s, a) = (1 – \alpha) Q(s, a) + \alpha \left[ r + \gamma \max_{a’} Q(s’, a’) \right] $$

where $\alpha = 0.1$ is the learning rate and $\gamma = 0.4$ is the discount factor. This approach enhances the algorithm’s adaptability, leading to better convergence and diversity in solutions.

To validate the model and algorithm, a case study involving the disassembly of an engine with 34 tasks is conducted. The precedence relationships of the tasks are complex, and five types of robots with different power consumptions are used. The tool change time is set to 3 seconds, and energy parameters are defined accordingly. The MOGGA-QL algorithm is compared with four classic multi-objective algorithms: NSGA-II, SPEA2, MOPSO, and MOABC. Each algorithm is run independently 20 times with a population size of 100 and a maximum runtime of 300 seconds. Performance is evaluated using hypervolume (HV), inverted generational distance (IGD), and spread metrics.

The results demonstrate that MOGGA-QL outperforms the other algorithms in terms of HV and IGD, with narrower confidence intervals, indicating better convergence and diversity. For instance, the best HV values achieved are 0.7334 for NSGA-II, 0.8088 for SPEA2, 0.8182 for MOPSO, 0.8246 for MOABC, and 0.8527 for MOGGA-QL. The Pareto front obtained by MOGGA-QL is closer to the true Pareto front, providing a range of solutions that balance completion time and energy consumption. Two representative solutions are analyzed: Solution A minimizes completion time (593 seconds) with higher energy consumption (1599.29 kW·s), while Solution B minimizes energy consumption (1270.65 kW·s) with a longer completion time (832 seconds). The Gantt charts for these solutions reveal that Solution A uses all five robots with multiple tool changes, whereas Solution B uses only two robots with lower power consumption, reducing energy usage but increasing time due to imbalanced task allocation.

The following table summarizes the key parameters used in the mathematical model and algorithm for quick reference:

| Symbol | Description |

|---|---|

| $I$ | Set of disassembly tasks |

| $M$ | Set of robots |

| $t_{im}$ | Disassembly time of task $i$ on robot $m$ |

| $o_i$ | Tool type for task $i$ |

| $t_b$ | Tool change time |

| $p_{ij}$ | Precedence relation (1 if $i$ precedes $j$) |

| $e_{d_m}$ | Operational energy per unit time for robot $m$ |

| $e_{c_m}$ | Tool change energy per unit time for robot $m$ |

| $e_{s_m}$ | Standby energy per unit time for robot $m$ |

| $x_{im}$ | Binary variable for task assignment |

| $TC$ | Maximum completion time |

Another table outlines the algorithm parameters for MOGGA-QL:

| Parameter | Value |

|---|---|

| Population size | 100 |

| Maximum iterations | 300 |

| Crossover probability | 0.8 |

| Mutation probability | 0.2 |

| Learning rate ($\alpha$) | 0.1 |

| Discount factor ($\gamma$) | 0.4 |

The integration of robot technology in disassembly processes not only improves efficiency but also supports sustainable practices by reducing energy consumption. The MOGGA-QL algorithm effectively handles the complexities of RP-DSP, demonstrating its superiority through empirical results. Future research could explore the application of deep reinforcement learning to further enhance adaptability in dynamic environments, such as real-time disassembly with uncertain conditions. As robot technology continues to evolve, its role in disassembly sequence planning will become increasingly vital, driving innovations in automation and resource recovery.

In conclusion, this article presents a comprehensive approach to robotic parallel disassembly sequence planning, combining mathematical modeling with an advanced genetic algorithm reinforced by Q-learning. The proposed method addresses key challenges in minimizing completion time and energy consumption, showcasing the transformative potential of robot technology in industrial applications. By leveraging adaptive algorithms, manufacturers can achieve higher efficiency and sustainability, paving the way for smarter and greener disassembly systems.