With the rapid advancement of industrial automation and intelligent systems, demand for inspection robots that can operate in complex, dynamic environments has grown significantly. Traditional inspection robots often adapt poorly to changing conditions, execute tasks inefficiently, and are difficult to coordinate as a team. Overcoming these limitations calls for new approaches. In this study, we propose a framework for intelligent inspection robots driven by embodied large models. It aims to make inspection robots more capable and efficient through multimodal data fusion and collaboration between edge and cloud computing. The system integrates real-time detection algorithms, synergy between local and cloud models, and multi-robot coordination to address the limitations of existing approaches. Our goals are to improve inspection accuracy, reduce latency, and maintain robust performance across scenarios such as aviation maintenance and industrial facilities.

The core of our research covers four areas: real-time detection algorithms, local and cloud collaborative work, system integration methods, and heterogeneous multi-robot collaboration. We begin with real-time detection algorithms, which comprise multimodal data fusion, rapid feature extraction, and anomaly detection.

Multimodal data fusion uses an adaptive spatial feature fusion algorithm built on the YOLOv5x network framework, combined with a lightweight MobileNet for feature extraction, and tunes the network depth to increase processing speed. Features are fused as: $$ F_{fused} = \sum_{i=1}^{n} w_i \cdot F_i $$ where \( F_i \) represents features from different modalities, and \( w_i \) denotes adaptive weights. This allows the robot to build accurate environment models efficiently.

Rapid feature extraction employs compressed lightweight models, such as those built from depthwise separable convolutions, to reduce the computational load. A depthwise separable convolution applies a depthwise convolution (one filter per input channel) and then a pointwise (1×1) convolution to its output: $$ \text{Output} = \text{PointwiseConv}(\text{DepthwiseConv}(X)) $$ where \( X \) is the input data.

Anomaly detection in the local models uses threshold-based rules, such as flagging cracks exceeding 2 mm or corrosion exceeding 5%, so that the robot can respond quickly.
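To make the fusion rule and the cost advantage of depthwise separable convolutions concrete, here is a minimal, standard-library-only sketch. It assumes each modality's features have already been resized to a common length, and it produces the weights \( w_i \) with a softmax over learnable logits, in the spirit of adaptive spatial feature fusion (ASFF). Unlike ASFF, which learns a weight per spatial position, the sketch uses one scalar weight per modality. The helper names (`fuse_features`, `conv_params`, `separable_params`) are hypothetical. A depthwise separable convolution is a per-channel k×k depthwise convolution followed by a 1×1 pointwise convolution, and the two parameter-count helpers compare it with a standard convolution.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    """Turn raw weight logits into non-negative weights that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def fuse_features(features: Sequence[Sequence[float]], logits: Sequence[float]) -> List[float]:
    """F_fused = sum_i w_i * F_i with w = softmax(logits).

    Assumes every modality's features were already resized to the same length.
    """
    if len(features) != len(logits):
        raise ValueError("need one weight logit per modality")
    length = len(features[0])
    if any(len(f) != length for f in features):
        raise ValueError("feature vectors must share a common length")
    weights = softmax(logits)
    return [sum(w * f[k] for w, f in zip(weights, features)) for k in range(length)]


def conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights in a standard k x k convolution (bias ignored)."""
    return k * k * c_in * c_out


def separable_params(k: int, c_in: int, c_out: int) -> int:
    """Depthwise k x k (one filter per input channel) plus a 1x1 pointwise convolution."""
    return k * k * c_in + c_in * c_out


rgb, thermal = [1.0, 2.0], [3.0, 4.0]
print(fuse_features([rgb, thermal], [0.0, 0.0]))                  # [2.0, 3.0]
print(conv_params(3, 128, 256), separable_params(3, 128, 256))    # 294912 33920
```

For a 3×3 layer mapping 128 to 256 channels, the separable form needs roughly 8.7× fewer weights, which is the kind of saving that makes MobileNet-style extractors practical on the robot.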

Next, we turn to local and cloud collaborative work, which comprises hierarchical processing and optimized communication protocols. Under hierarchical processing, local models handle real-time tasks while complex analyses are offloaded to the cloud; this division is crucial for keeping latency low. The optimized communication protocols compress data to make transmission efficient and encrypt it to keep it secure. For example, we use AES encryption to protect sensitive data, while compression reduces the bandwidth needed, which helps operations stay reliable even over unstable networks.
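A minimal sketch of the hierarchical split and of the order of operations for transmission follows. It assumes a simple rule: tasks that need deep analysis go to the cloud when the link is up and are queued locally otherwise. The `Task` type and the `route`, `pack`, and `unpack` functions are hypothetical names. Encryption is left as a pluggable callable; in a real deployment this hook could be AES-GCM, for instance via the `AESGCM` class of the Python `cryptography` package, which the sketch deliberately does not depend on. Compression has to come before encryption, because well-encrypted data looks random and no longer compresses.

```python
import zlib
from dataclasses import dataclass
from typing import Callable


@dataclass
class Task:
    name: str
    needs_deep_analysis: bool  # e.g. a flagged frame awaiting cloud verification
    payload: bytes


def route(task: Task, link_up: bool) -> str:
    """Real-time work stays local; heavy analysis goes to the cloud if reachable, else it is queued."""
    if not task.needs_deep_analysis:
        return "local"
    return "cloud" if link_up else "queue"


def pack(payload: bytes, encrypt: Callable[[bytes], bytes]) -> bytes:
    """Compress first, then encrypt (hypothetical hook where an AES cipher would plug in)."""
    return encrypt(zlib.compress(payload, 6))


def unpack(blob: bytes, decrypt: Callable[[bytes], bytes]) -> bytes:
    """Reverse of pack: decrypt, then decompress."""
    return zlib.decompress(decrypt(blob))


identity = lambda b: b  # stand-in cipher for demonstration only; provides no security
frame = b"thermal-frame " * 200
blob = pack(frame, identity)
print(route(Task("verify-crack", True, frame), link_up=False))  # queue
print(len(frame), len(blob) < len(frame))                      # 2800 True
```

Falling back to a local queue when the link drops is one simple way to keep the robot working under unstable network conditions.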

System integration methods are another critical aspect, covering architecture design, data synchronization, and model management. We adopt a layered architecture with an edge layer and a cloud layer, whose modular design improves maintainability. Data synchronization uses a timestamp-based mechanism to keep sensor data consistent, with the synchronization point given by: $$ t_{sync} = \max(t_1, t_2, \dots, t_n) $$ where \( t_i \) are timestamps from the various sensors; taking the maximum selects the point by which every sensor has reported. Model management includes dynamically switching between local and cloud models based on network conditions, which makes the system more resilient.
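The synchronization rule can be sketched as follows. The sketch assumes each \( t_i \) is the most recent timestamp (in seconds) reported by sensor \( i \). It also assumes a hypothetical `tolerance`: a sensor whose latest reading lags \( t_{sync} \) by more than this is reported as stale instead of being aligned. The function name `synchronize` is illustrative.

```python
from typing import Dict, List, Tuple


def synchronize(latest: Dict[str, float], tolerance: float) -> Tuple[float, Dict[str, float], List[str]]:
    """Return t_sync, each sensor's lag behind it, and the sensors that are too stale.

    latest: most recent timestamp (seconds) reported by each sensor.
    tolerance: hypothetical maximum lag accepted before a reading counts as stale.
    """
    if not latest:
        raise ValueError("need at least one sensor timestamp")
    t_sync = max(latest.values())  # t_sync = max(t_1, ..., t_n)
    lags = {name: t_sync - t for name, t in latest.items()}
    stale = sorted(name for name, lag in lags.items() if lag > tolerance)
    return t_sync, lags, stale


readings = {"rgb": 10.00, "thermal": 9.98, "lidar": 9.90, "ultrasonic": 9.50}
t_sync, lags, stale = synchronize(readings, tolerance=0.05)
print(t_sync, stale)  # 10.0 ['lidar', 'ultrasonic']
```

A stale sensor could then be re-queried, interpolated, or temporarily excluded from fusion; which policy fits best depends on the sensor and is not prescribed here.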

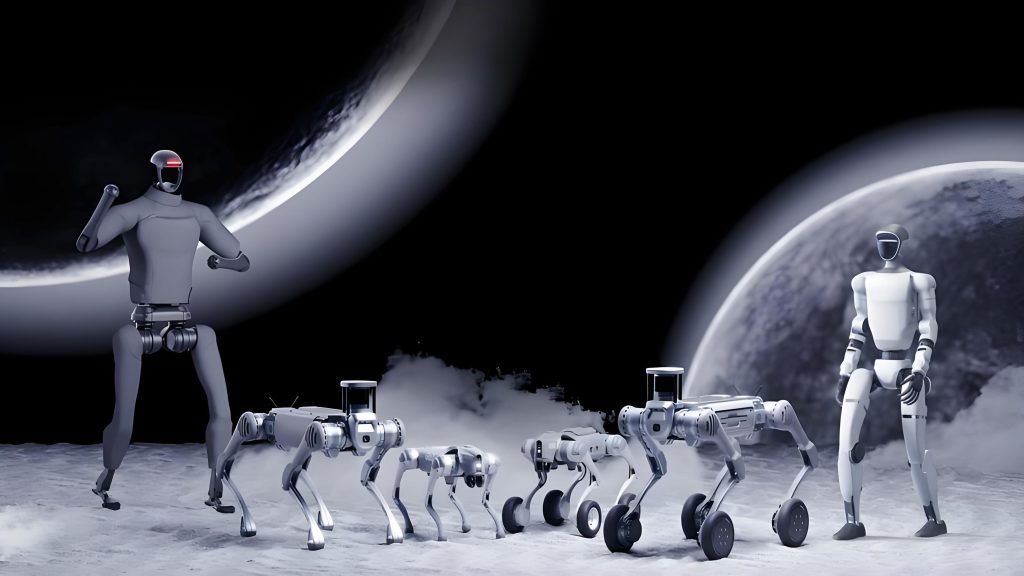

Heterogeneous multi-robot collaboration covers cooperative planning, communication mechanisms, perception, decision-making, control, and fault handling. We develop dynamic task allocation algorithms that optimize resource utilization, for example by solving the assignment problem with the Hungarian algorithm: $$ \min \sum_{i=1}^{m} \sum_{j=1}^{n} c_{ij} x_{ij} $$ where \( c_{ij} \) is the cost of assigning robot \( i \) to task \( j \), and \( x_{ij} \in \{0, 1\} \) indicates whether that assignment is made, subject to each task being assigned to exactly one robot and each robot receiving at most one task. Cooperative communication relies on message queues to reduce latency, while distributed perception integrates data from multiple sensors to build global situational awareness. Fault handling automatically reallocates tasks to keep inspections running, a vital feature for robust deployments.
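The allocation step can be sketched with a compact pure-Python implementation of the Hungarian algorithm, in its O(n²m) shortest-augmenting-path form with row and column potentials. It expects one row per task and one column per robot, which is the transpose of \( c_{ij} \) above, and at least as many robots as tasks. The function name and matrix layout are our own illustrative choices, and the costs in the example are hypothetical. Libraries such as SciPy expose the same computation as `scipy.optimize.linear_sum_assignment`.

```python
from typing import List, Sequence


def hungarian(cost: Sequence[Sequence[float]]) -> List[int]:
    """Minimum-cost assignment. cost[r][c] = cost of giving row r (a task) to column c (a robot).

    Requires rows <= columns; returns the chosen column for every row.
    """
    if not cost:
        return []
    n, m = len(cost), len(cost[0])
    if n > m:
        raise ValueError("need at least as many columns (robots) as rows (tasks)")
    inf = float("inf")
    u = [0.0] * (n + 1)   # row potentials (1-indexed)
    v = [0.0] * (m + 1)   # column potentials; column 0 is a sentinel
    p = [0] * (m + 1)     # p[j] = row matched to column j (0 = free)
    way = [0] * (m + 1)   # previous column on the augmenting path
    for i in range(1, n + 1):
        p[0], j0 = i, 0
        minv = [inf] * (m + 1)
        used = [False] * (m + 1)
        while True:
            used[j0] = True
            i0, delta, j1 = p[j0], inf, 0
            for j in range(1, m + 1):
                if not used[j]:
                    cur = cost[i0 - 1][j - 1] - u[i0] - v[j]  # reduced cost
                    if cur < minv[j]:
                        minv[j], way[j] = cur, j0
                    if minv[j] < delta:
                        delta, j1 = minv[j], j
            for j in range(m + 1):  # shift potentials so reduced costs stay >= 0
                if used[j]:
                    u[p[j]] += delta
                    v[j] -= delta
                else:
                    minv[j] -= delta
            j0 = j1
            if p[j0] == 0:  # reached a free column: augmenting path found
                break
        while j0:  # flip the matching along the augmenting path
            j1 = way[j0]
            p[j0] = p[j1]
            j0 = j1
    result = [-1] * n
    for j in range(1, m + 1):
        if p[j]:
            result[p[j] - 1] = j - 1
    return result


# Rows = tasks, columns = robots (hypothetical costs, e.g. travel time in minutes).
costs = [[4, 1, 3],
         [2, 0, 5],
         [3, 2, 2]]
print(hungarian(costs))  # [1, 0, 2]: task 0 -> robot 1, task 1 -> robot 0, task 2 -> robot 2
```

For fault handling, one simple strategy is to delete the failed robot's column and solve again, which re-optimizes the whole assignment over the remaining robots as long as they still outnumber or equal the tasks.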

In terms of how local and cloud models empower the system, local lightweight models excel at real-time multimodal data processing, low-latency responses, and bandwidth conservation. For example, they perform preliminary analyses and filter data to reduce uploads, which is essential for efficient operation. Cloud large models, in turn, use high-performance computing for advanced image recognition, detailed data analysis, and elastic resource scaling. This synergy allows the system to handle complex tasks, such as detecting subtle defects in aircraft skins, with high accuracy.

The workflow of our intelligent inspection robot system begins with initialization, in which robots activate their sensors and load the local models. As they follow predefined paths, they continuously collect and process data and identify anomalies locally. Flagged data is then uploaded to the cloud for in-depth analysis, and the results are fed back to generate reports and alerts. This process lets the robots operate efficiently in real time, while all data is stored in the cloud for future optimization.
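The real-time part of this workflow can be sketched as a small pipeline. The sketch assumes each frame already carries a local anomaly score in [0, 1] and uses a hypothetical flagging threshold. `Frame`, `InspectionRun`, and the `cloud_verify` callback are illustrative stand-ins for the on-robot detector and the cloud model, not components of the actual system.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Frame:
    waypoint: str   # position along the predefined inspection path
    score: float    # local anomaly score in [0, 1] (assumed output of the on-robot model)


@dataclass
class InspectionRun:
    threshold: float = 0.5  # hypothetical flagging threshold
    processed: int = 0
    uploaded: int = 0
    queue: List[Frame] = field(default_factory=list)

    def process_locally(self, frame: Frame) -> None:
        """Every frame is analysed on the robot; only flagged frames are marked for upload."""
        self.processed += 1
        if frame.score >= self.threshold:
            self.queue.append(frame)
            self.uploaded += 1

    def verify_in_cloud(self, cloud_verify: Callable[[Frame], bool]) -> List[str]:
        """Send flagged frames to the cloud model; return waypoints confirmed for the report."""
        confirmed = [f.waypoint for f in self.queue if cloud_verify(f)]
        self.queue.clear()
        return confirmed


run = InspectionRun()
for f in [Frame("wp1", 0.1), Frame("wp2", 0.8), Frame("wp3", 0.6), Frame("wp4", 0.2)]:
    run.process_locally(f)
report = run.verify_in_cloud(lambda f: f.score > 0.7)  # stand-in for cloud analysis
print(report, f"{run.uploaded}/{run.processed} frames uploaded")  # ['wp2'] 2/4 frames uploaded
```

The sketch covers only the real-time path; archiving the full data set in the cloud for later optimization is omitted.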

Application scenarios demonstrate the practicality of our framework, particularly in aviation settings such as aircraft aprons and maintenance hangars. We equipped robots with high-resolution RGB cameras, ultrasonic flaw detectors, infrared thermal imagers, and LiDAR to perform detailed inspections of aircraft skins. Through multimodal data fusion, the system accurately identifies cracks, corrosion, and deformations while adapting to material properties and environmental changes. For instance, the damage model incorporates material-specific thresholds, which improves detection precision. In experimental validation on 25 aircraft, our system detected 16 cracks, 9 corrosion spots, and 5 structural deformations, achieving a 60% improvement in efficiency and a 32% increase in accuracy compared with manual methods. Local anomaly detection latency was under 500 milliseconds, and cloud verification responses averaged 6 seconds, showing the effectiveness of the approach.
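Material-specific thresholds can be illustrated with a small rule table. This is a sketch under stated assumptions: it reuses the 2 mm crack and 5% corrosion rules of the local anomaly detector as defaults, and the `thin_skin_panel` override is a hypothetical value chosen only to show the mechanism, not a threshold used in the experiments. The names `DamageThresholds`, `MATERIAL_THRESHOLDS`, and `damage_flags` are illustrative.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class DamageThresholds:
    crack_mm: float = 2.0       # flag cracks whose measured size exceeds this (default local rule)
    corrosion_pct: float = 5.0  # flag corrosion above this percentage (default local rule)


# Hypothetical per-material overrides, for illustration only.
MATERIAL_THRESHOLDS: Dict[str, DamageThresholds] = {
    "thin_skin_panel": DamageThresholds(crack_mm=1.0),
}


def damage_flags(material: str, crack_mm: float, corrosion_pct: float) -> List[str]:
    """Apply the material's thresholds, falling back to the defaults for unlisted materials."""
    t = MATERIAL_THRESHOLDS.get(material, DamageThresholds())
    flags = []
    if crack_mm > t.crack_mm:
        flags.append("crack")
    if corrosion_pct > t.corrosion_pct:
        flags.append("corrosion")
    return flags


print(damage_flags("generic_skin", crack_mm=1.5, corrosion_pct=6.0))     # ['corrosion']
print(damage_flags("thin_skin_panel", crack_mm=1.5, corrosion_pct=6.0))  # ['crack', 'corrosion']
```

Keeping thresholds in data rather than code lets them be tuned per material without redeploying the local model.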

To quantify the results, we present a table summarizing key performance metrics:

| Metric | Result | Basis / Notes |
|---|---|---|
| Detection efficiency | 60% faster than manual methods | Time per inspection |
| Accuracy | 32% higher than manual methods | Reduction in false negatives |
| Local latency | < 500 ms | Initial anomaly detection |
| Cloud response time | ~6 s | Detailed analysis |
| False alarm rate | 5% reduction | Multi-round validation |

To illustrate the anomaly detection process, we model the probability of an anomaly as: $$ P(\text{anomaly}) = \sigma(\mathbf{w}^T \mathbf{x} + b) $$ where \( \sigma \) is the sigmoid function, \( \mathbf{w} \) is the weight vector, \( \mathbf{x} \) is the feature vector, and \( b \) is the bias. Comparing this probability against a threshold that adapts to environmental factors yields adaptive anomaly decisions.
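A minimal sketch of the logistic anomaly score and an adaptive decision threshold follows. The linear-plus-sigmoid part follows the formula above. The threshold adjustment is a hypothetical rule, assuming a single environmental nuisance factor `env_noise` in [0, 1] (for example, glare or vibration) that raises the threshold to suppress false alarms. The function names and the `sensitivity` parameter are illustrative assumptions.

```python
import math
from typing import Sequence


def anomaly_probability(w: Sequence[float], x: Sequence[float], b: float) -> float:
    """P(anomaly) = sigmoid(w^T x + b)."""
    if len(w) != len(x):
        raise ValueError("weights and features must have the same length")
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    # Numerically stable sigmoid: never exponentiate a large positive number.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def adaptive_threshold(base: float, env_noise: float, sensitivity: float = 0.2) -> float:
    """Hypothetical rule: raise the threshold linearly with env_noise, capped below 1."""
    env_noise = min(max(env_noise, 0.0), 1.0)
    return min(base + sensitivity * env_noise, 0.99)


def is_anomaly(w: Sequence[float], x: Sequence[float], b: float,
               base: float = 0.5, env_noise: float = 0.0) -> bool:
    return anomaly_probability(w, x, b) >= adaptive_threshold(base, env_noise)


w, x, b = [1.5, 2.0], [0.4, 0.1], -0.5        # z = 0.3, P ~ 0.574
print(is_anomaly(w, x, b))                    # True  (threshold 0.5 in calm conditions)
print(is_anomaly(w, x, b, env_noise=1.0))     # False (threshold 0.7 under heavy noise)
```

Raising the threshold in noisy conditions trades some sensitivity for fewer false alarms; the cloud verification stage can then recover borderline cases.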

In conclusion, our research presents a novel framework that leverages embodied large models and multi-robot collaboration to advance intelligent inspection robots. By addressing key challenges in perception, decision-making, and execution, we have demonstrated significant improvements in efficiency, safety, and cost. The integration of multimodal data fusion, cloud-edge synergy, and collaborative algorithms positions this approach as a cornerstone of future industrial automation. We believe this work not only contributes to the theoretical foundations of inspection robotics but also offers practical solutions for real-world applications, paving the way for smarter, more adaptive robotic systems.