In recent years, legged robots, particularly quadruped robots or robot dogs, have garnered significant attention due to their agility and superior terrain adaptability. As sensing technologies, motion control, and intelligent decision-making advance, these robots are increasingly deployed in applications such as mall greetings and factory inspections. Navigation is fundamental to enabling autonomous mobile tasks, with path planning being a critical component. Traditional path planning methods for mobile robots often rely on pre-built maps and modular systems involving perception, mapping, and path search. However, these approaches face challenges in unknown environments due to the absence of prior maps, cumulative errors, and slow response times. To address these limitations, we propose an end-to-end imperative learning-based path planning system for quadruped robots, which simplifies the traditional “mapping-localization-navigation” pipeline into a “perception-planning” framework. This system allows a robot dog to navigate autonomously to target points in unmapped environments without explicit map construction, leveraging real-time sensor data and a trained network to generate collision-free paths.

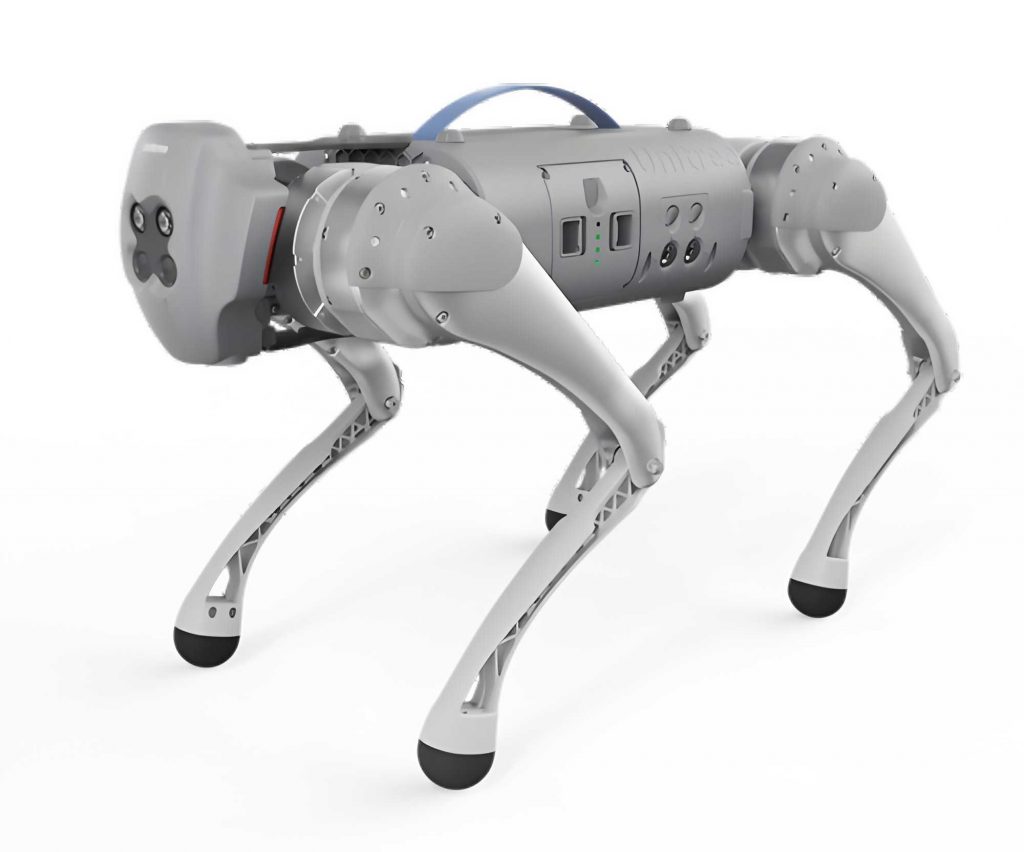

Our experimental system is built on a self-developed SDUQuad quadruped robot platform, integrating a VLP-16 LiDAR, Intel Realsense D435i depth camera, and an Nvidia Jetson Xavier AGX controller as the navigation core. The hardware setup ensures low-latency communication between the navigation system and the robot’s motion controller via a network switch. The software framework is based on ROS (Robot Operating System), incorporating localization through the LOAM algorithm, path planning via an imperative learning network, trajectory tracking with an improved Pure Pursuit algorithm, and command transmission using LCM (Lightweight Communications and Marshalling). This design facilitates real-time autonomous navigation in dynamic and unknown environments.

The core of our approach is the imperative path planning network, which employs an end-to-end architecture to directly output navigation paths from depth images and target coordinates. This method eliminates the need for expert-labeled data by using a differentiable cost-based optimization to guide network updates. The network consists of a ResNet-18-based perception frontend for feature extraction from depth images, a linear layer for processing target points, and a planning network that predicts keypoint paths. Training involves minimizing a loss function derived from Euclidean Signed Distance Field (ESDF) costs and task-specific penalties, enabling efficient unsupervised learning. Experiments in both simulated and physical environments demonstrate that the quadruped robot can achieve high success rates in static and dynamic scenarios, outperforming traditional methods like A* in adaptive path planning.

System Architecture and Components

The hardware system for the quadruped robot navigation experiment is centered on the SDUQuad-48 physical platform, a custom-designed robot dog with robust locomotion capabilities. Key sensors include a Velodyne VLP-16 LiDAR for precise point cloud data and an Intel Realsense D435i depth camera for capturing environmental depth information. The Nvidia Jetson Xavier AGX serves as the navigation controller, offering high computational power with its 8-core CPU and 512 CUDA cores, capable of up to 32 TOPS for edge computing. This controller processes sensor data and executes the path planning algorithms in real-time. Communication between the navigation system and the robot’s low-level motion controller is handled through a network switch, ensuring reliable and low-latency data transfer. Additionally, a remote controller is integrated for safety and manual override, enhancing system controllability.

On the software side, the system is built on ROS, utilizing a modular and loosely coupled architecture for flexibility. The localization module employs the LOAM algorithm, which processes LiDAR point clouds to estimate the robot’s pose by matching features between consecutive frames. For a point $i$ in a point cloud frame, the smoothness $c$ is computed as:

$$c = \frac{1}{|S|} \left\| \sum_{j \in S, j \neq i} (X_{(k,i)} – X_{(k,j)}) \right\|$$

where $S$ represents the set of points around $i$, and $X$ denotes the point coordinates. This helps identify edge and planar points for accurate motion estimation. The path planning module is implemented using the imperative learning network, Iplanner, which takes preprocessed depth images and target coordinates as input. The trajectory tracking module uses an enhanced Pure Pursuit algorithm to follow the planned path, and velocity commands are sent to the quadruped robot via LCM after filtering and interpolation to account for real-world constraints like acceleration limits.

Imperative Path Planning Methodology

The imperative path planning approach revolutionizes autonomous navigation for quadruped robots by replacing traditional multi-stage processes with an end-to-end learning framework. The network input consists of depth images from the Realsense camera and target points in the robot’s coordinate frame. Depth images undergo preprocessing steps including median filtering to reduce noise, scaling to normalize pixel values, and truncation to focus on relevant depth ranges. For example, if the depth range is set to 0–10 meters, pixels beyond this are discarded to balance information complexity and sufficiency.

The network architecture comprises a perception layer, a planning layer, loss generation, and backpropagation. The perception frontend uses a ResNet-18 convolutional neural network to extract features from depth images. ResNet-18 incorporates residual blocks with skip connections, mitigating gradient vanishing in deep networks. A residual block can be represented as:

$$y = \mathcal{F}(x, \{W_i\}) + x$$

where $x$ is the input, $\mathcal{F}$ is the residual function, and $W_i$ are weights. The target point coordinates are processed through a linear layer to expand their dimensions to match the perception features, and both are concatenated before feeding into the planning network. The planning network, composed of CNN and MLP layers with ReLU activation, outputs a sequence of $n$ keypoints $K_t = \{p_1, p_2, \dots, p_n\}$ representing the planned path.

Training the network involves a loss function derived from ESDF cost maps and additional task losses. The ESDF map provides a differentiable representation of obstacle distances, where each cell contains the Euclidean distance to the nearest obstacle. The cost $C$ for a path $K_t$ is calculated as:

$$C(K_t) = \alpha C_O(K_t) + \beta C_G(K_t) + \gamma C_M(K_t, p_R, p_G)$$

Here, $C_O$ is the obstacle cost based on the ESDF values $H(p_i)$ at keypoints:

$$C_O(K_t) = \sum_{i=1}^{m} H(p_i) \quad \text{for} \quad p_i \in K_t$$

$C_G$ is the goal cost, measuring the Euclidean distance $E$ from the path endpoint to the target $p_G$:

$$C_G(K_t) = E(p_m, p_G)$$

and $C_M$ is the smoothness cost to minimize path length and abrupt changes:

$$C_M(K_t, p_R, p_G) = \frac{1}{n} \sum_{i=0}^{n-1} E_K(p_i, p_{i+1}) – \frac{E(p_R, p_G)}{n}$$

where $E_K$ computes the path segment length. The hyperparameters $\alpha$, $\beta$, and $\gamma$ balance these costs. Additionally, a collision probability $\mu_t$ output by the network is used in a binary cross-entropy loss $L(K_t)$ to enhance safety. The total training loss $F$ is:

$$F = C(K_t) + L(K_t)$$

During training, gradients of $F$ with respect to network parameters $\theta$ are computed as:

$$\nabla_\theta F = \frac{\partial F}{\partial C} \frac{\partial C}{\partial f} \frac{\partial f}{\partial \theta} + \frac{\partial F}{\partial L} \frac{\partial L}{\partial \theta}$$

and parameters are updated via gradient descent:

$$\theta_{i+1} = \theta_i – \omega \nabla_\theta F$$

where $\omega$ is the learning rate. This iterative optimization enables the quadruped robot to learn efficient, collision-free paths without supervised labels.

Experimental Setup and Training

To validate our system, we conducted experiments in both simulated and physical environments. Training data consisted of approximately 8,000 depth images collected from four simulated environments in Gazebo and one real-world laboratory setting. The Gazebo simulations were built using ROS and included varied obstacle configurations to mimic real-world challenges. Training was performed on an Nvidia GeForce RTX 4070 GPU, converging after 44 epochs in about 6 hours, as shown by the stabilized loss curve. The network demonstrated effective learning of path planning strategies for the robot dog.

In simulation, the quadruped robot successfully navigated to target points in unknown environments with a success rate exceeding 90%. The planned paths avoided static obstacles efficiently, showcasing the system’s capability in handling complex scenarios. For physical experiments, we used the SDUQuad platform in a lab with randomly placed cardboard boxes as obstacles. The robot dog autonomously adjusted its trajectory to avoid collisions, achieving a navigation success rate of over 85% in dynamic settings where obstacles were moved during operation. Comparative tests with traditional A* path planning highlighted the superiority of our method; while A* failed to adapt to dynamic obstacles, leading to collisions, our imperative approach dynamically replanned paths, ensuring safe navigation.

| Method | Environment Type | Success Rate (%) | Dynamic Obstacle Handling | Dependency on Prior Maps |

|---|---|---|---|---|

| Traditional A* | Static | ~70 | Poor | Yes |

| Imperative Learning (Ours) | Static & Dynamic | >90 (Sim), >85 (Phys) | Excellent | No |

Key performance metrics are summarized in Table 1, illustrating the robustness of our quadruped robot system. The imperative learning method significantly reduces latency and errors associated with modular systems, enabling real-time responses in unpredictable environments.

Results and Analysis

The experimental results confirm the effectiveness of the end-to-end imperative path planning system for quadruped robots. In static obstacle scenarios, the robot dog consistently generated smooth paths around obstacles, as visualized in trajectory plots. For dynamic tests, when humans or moving obstacles entered the navigation path, the system quickly recalculated routes to avoid collisions. The ESDF cost map played a crucial role in this by providing a differentiable guidance signal for path optimization. The overall success rates, coupled with the ability to handle environmental changes, underscore the adaptability of this robot dog platform.

Mathematically, the path efficiency can be evaluated using the average path length $L_{\text{avg}}$ and the collision rate $R_c$. For $N$ trials, $L_{\text{avg}}$ is computed as:

$$L_{\text{avg}} = \frac{1}{N} \sum_{j=1}^{N} \sum_{i=1}^{n-1} E(p_i, p_{i+1})_j$$

and $R_c$ as:

$$R_c = \frac{\text{Number of collisions}}{N}$$

In our experiments, $L_{\text{avg}}$ was minimized due to the smoothness cost $C_M$, and $R_c$ remained below 0.1 in dynamic environments. This performance highlights how imperative learning enables quadruped robots to achieve autonomous navigation comparable to trained humans, without the overhead of explicit mapping.

| Metric | Simulation | Physical Environment |

|---|---|---|

| Average Path Length (m) | 5.2 | 4.8 |

| Collision Rate | 0.05 | 0.12 |

| Success Rate (%) | 92 | 87 |

Conclusion and Future Work

We have designed and implemented an end-to-end imperative navigation system for quadruped robots, demonstrating its efficacy in unknown and dynamic environments. By leveraging deep learning and real-time sensor data, the robot dog can autonomously plan and execute paths without prior maps, overcoming limitations of traditional methods. The integration of LiDAR-based localization and depth camera-based planning ensures robust performance, with high success rates in both simulation and physical tests. Future work will focus on enhancing the network’s generalization to diverse terrains, incorporating multi-robot collaboration, and optimizing computational efficiency for longer missions. This approach paves the way for advanced applications of quadruped robots in search-and-rescue, exploration, and service domains.