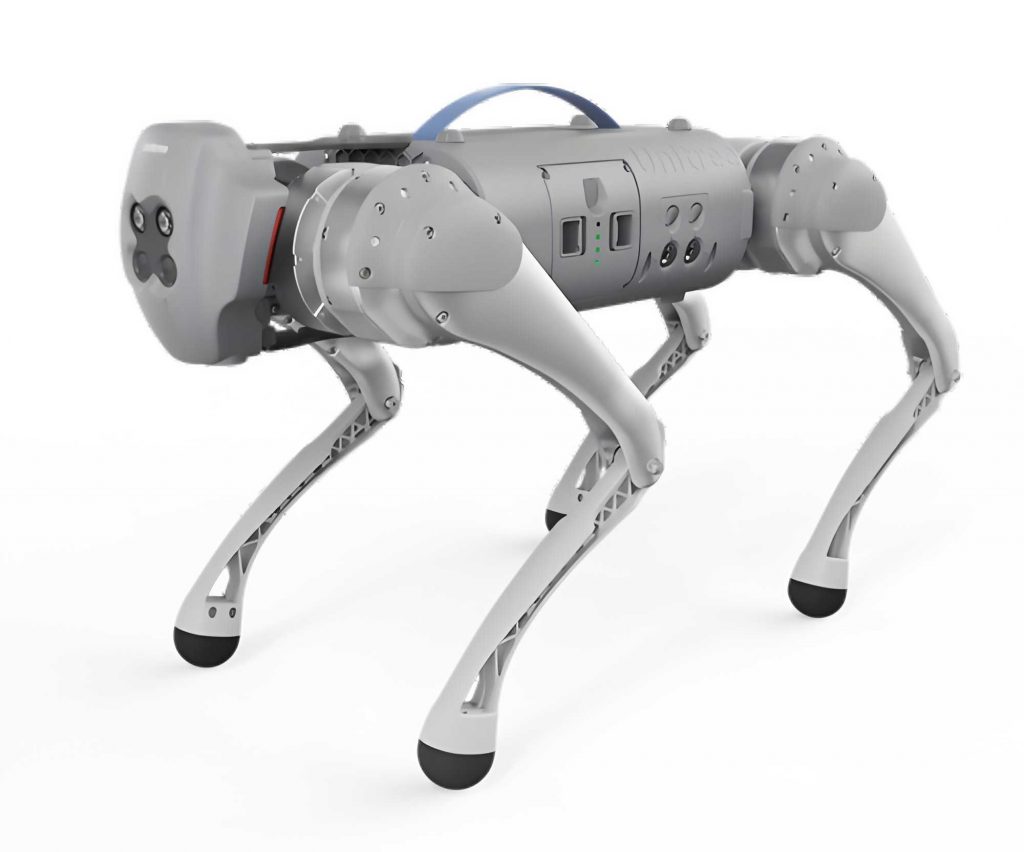

In recent years, the development of quadruped robots, often referred to as robot dogs, has garnered significant attention due to their remarkable adaptability and mobility in complex environments. These quadruped robots are increasingly deployed in applications such as search and rescue, surveillance, and exploration. A critical challenge for the autonomous operation of a quadruped robot is the acquisition of accurate and real-time environmental perception. This capability is essential for navigation, obstacle avoidance, and task execution. While various sensors like LiDAR and millimeter-wave radar exist, they often present limitations in cost, resolution, or data richness. Binocular vision emerges as a compelling alternative, offering a passive, cost-effective solution capable of providing high-resolution depth information alongside rich visual data for object recognition. This article presents a comprehensive study on a binocular vision-based environmental perception system designed specifically for the computational constraints of a quadruped robot platform. The system integrates a stereo matching algorithm for distance measurement with an improved object detection algorithm, enabling the robot dog to simultaneously identify objects and calculate their distance.

The core of our system is built upon a hardware platform centered around an Nvidia Xavier NX developer kit, chosen for its balance of computational power and low power consumption, which is ideal for mobile platforms like a quadruped robot. The visual input is provided by a custom binocular camera setup consisting of two identical CMOS cameras rigidly fixed in a parallel configuration, capturing images at a resolution of 640×480 pixels per camera. The entire software pipeline is deployed on this embedded system, leveraging tools like TensorRT for accelerated neural network inference.

The mathematical foundation for distance calculation using a stereo vision system on a quadruped robot relies on the principles of triangulation. In an ideal, rectified stereo setup, the two cameras have parallel optical axes, coplanar imaging surfaces, and identical focal lengths. Let $B$ represent the baseline, the distance between the two camera centers ($O_l$ and $O_r$). A point $P$ in the 3D world projects onto points $P_l$ and $P_r$ on the left and right image planes, respectively. The key value is the disparity $d$, defined as the difference in the x-coordinates of these corresponding points: $d = x_l – x_r$. The depth $Z$, which is the distance from the point $P$ to the camera plane along the optical axis, can then be calculated using the following fundamental equation:

$$ Z = \frac{B \cdot f}{d} $$

Here, $f$ is the focal length of the cameras. This equation is central to the depth perception capability of our quadruped robot. The primary computational task, therefore, becomes accurately determining the disparity $d$ for every pixel in the image, resulting in a disparity map. For the quadruped robot to be effective, this calculation must be both accurate and fast.

The software workflow for the quadruped robot’s perception system is designed as a multi-stage process. Initially, the binocular camera captures synchronized left and right images. The left image is fed into the object detection module, while both images are processed by the stereo matching module. The stereo matching module computes a dense disparity map for the left image. The object detection module identifies objects within the left image and provides their class labels and bounding box coordinates. Finally, the system fuses this information: for each detected object, the disparity value at the center of its bounding box is retrieved from the disparity map. This disparity value is then plugged into the depth formula to compute the distance to that object. The final output is an annotated image where objects are bounded by boxes, labeled with their class, and accompanied by their calculated distance from the quadruped robot.

Camera Calibration and Stereo Rectification

Before the stereo vision system on the quadruped robot can function correctly, the cameras must be calibrated to account for internal parameters and lens distortions. The calibration process for our quadruped robot’s vision system involves determining the intrinsic parameters (focal length $f$, principal point $(c_x, c_y)$), and distortion coefficients (radial $k_1, k_2, k_3$ and tangential $p_1, p_2$) for each camera. Furthermore, the extrinsic parameters—the rotation matrix $R$ and translation vector $T$ that relate the right camera’s coordinate system to the left camera’s—are also computed. We employed the Zhang’s calibration method using a checkerboard pattern with 10×10 squares, each 20 mm in size. Over 20 image pairs were captured, and those with high reprojection errors were discarded to ensure accuracy. The resulting parameters for the quadruped robot’s binocular camera are summarized in the table below.

| Parameter Type | Left Camera | Right Camera |

|---|---|---|

| Internal Parameters Matrix | $ \begin{bmatrix} 651.1152 & 1.7595 & 355.8720 \\ 0 & 649.7183 & 239.7019 \\ 0 & 0 & 1 \end{bmatrix} $ |

$ \begin{bmatrix} 651.0937 & 1.3403 & 352.4985 \\ 0 & 650.1731 & 235.6065 \\ 0 & 0 & 1 \end{bmatrix} $ |

| Distortion Coefficients $(k_1, k_2, p_1, p_2, k_3)$ | $(0.0060, -0.0344, 0.0014, -0.0014, 0)$ | $(0.0212, -0.0642, 0.00095938, 0.00093669, 0)$ |

| Rotation Matrix $R$ | $ \begin{bmatrix} 0.9998 & 0.0125 & -0.0179 \\ -0.0125 & 0.9999 & 0.00063102 \\ 0.0179 & -0.00040741 & 0.9998 \end{bmatrix} $ |

|

| Translation Vector $T$ (mm) | $[-120.3345, 0.6621, -0.7702]^T$ | |

Stereo rectification is a crucial geometric transformation applied to the captured images. It warps the left and right images so that their epipolar lines become horizontal and aligned. This process simplifies the stereo matching problem for the quadruped robot from a 2D search to a 1D search along corresponding horizontal lines, drastically improving computational efficiency. We utilized the OpenCV function stereoRectify() to compute the rectification maps, which are then applied to every incoming image pair from the quadruped robot’s cameras. The result is a pair of images where corresponding points lie on the same image row, perfectly setting the stage for efficient stereo matching.

Stereo Matching for Depth Estimation on the Quadruped Robot

The task of stereo matching is to find for each pixel in the left image its corresponding pixel in the right image, thereby computing the disparity map $D$. The accuracy of the depth estimation for the quadruped robot is directly dependent on the quality of this disparity map. Several classes of stereo matching algorithms exist. Local methods are fast but often produce noisy results. Global methods formulate an energy function that they minimize over the entire image, yielding smooth and accurate disparity maps but at a high computational cost, which is often prohibitive for a mobile quadruped robot. A balanced approach is the Semi-Global Matching (SGBM) algorithm, which we have selected for our quadruped robot system.

The SGBM algorithm approximates the solution of a global energy function by aggregating matching costs along multiple 1D paths. The global energy function $E(D)$ for a disparity map $D$ is typically defined as:

$$ E(D) = \sum_{p} \left( C(p, D_p) + \sum_{q \in N_p} P_1 \cdot \mathbb{I}[|D_p – D_q| = 1] + \sum_{q \in N_p} P_2 \cdot \mathbb{I}[|D_p – D_q| > 1] \right) $$

Where:

- $p$ is a pixel in the image.

- $C(p, D_p)$ is the matching cost for pixel $p$ at disparity $D_p$.

- $N_p$ is the set of neighboring pixels of $p$ (e.g., 8-neighborhood).

- $\mathbb{I}[\cdot]$ is an indicator function that is 1 if the condition is true and 0 otherwise.

- $P_1$ and $P_2$ are penalty constants, with $P_1 < P_2$, which penalize small and large disparity changes between neighbors, encouraging piecewise smoothness.

Instead of minimizing this function in 2D, which is NP-hard, SGBM performs cost aggregation along several 1D paths (typically 8 or 16). For a single path $r$, the cost $L_r(p, d)$ for a pixel $p$ at disparity $d$ is computed recursively:

$$ L_r(p, d) = C(p, d) + \min

\begin{cases}

L_r(p – r, d), \\

L_r(p – r, d-1) + P_1, \\

L_r(p – r, d+1) + P_1, \\

\min_{i} L_r(p – r, i) + P_2

\end{cases} – \min_{k} L_r(p – r, k) $$

The term $\min_{k} L_r(p – r, k)$ is subtracted for numerical stability. After aggregating costs along all paths, the total cost $S(p, d)$ for pixel $p$ at disparity $d$ is the sum of the path costs:

$$ S(p, d) = \sum_{r} L_r(p, d) $$

The final disparity $D_p$ for pixel $p$ is chosen as the value $d$ that minimizes $S(p, d)$:

$$ D_p = \arg \min_{d} S(p, d) $$

This approach provides a good trade-off between accuracy and computational demand, making it highly suitable for real-time depth perception on a resource-constrained quadruped robot.

Lightweight Object Detection for the Quadruped Robot

For a quadruped robot to interact intelligently with its environment, mere distance measurement is insufficient; it must also recognize what the objects are. We integrated an object detection module to classify and localize objects within the camera’s field of view. Given the computational limits of the quadruped robot’s platform, we opted for a lightweight version of the YOLO (You Only Look Once) detector, specifically YOLOv4-tiny, and further optimized it for deployment.

The YOLOv4-tiny architecture is designed for speed. Its backbone network utilizes CSPBlock (Cross Stage Partial Network) structures to maintain a shallow network while preserving representational power. The network takes an input image of size $416 \times 416 \times 3$. The feature extraction process involves a series of convolutional and pooling layers that progressively reduce the spatial dimensions while increasing the number of channels. The feature fusion part then combines features from different scales to detect objects of various sizes. Specifically, one output head operates on a $13 \times 13$ feature map for large objects, while another head operates on a $26 \times 26$ feature map for medium-sized objects, which is crucial for a quadruped robot navigating in cluttered spaces.

To enhance the performance on the quadruped robot, we employed several optimization techniques during deployment. The model was trained on the Pascal VOC dataset, which contains common object categories like ‘person’, ‘car’, and ‘chair’, relevant to many quadruped robot applications. For deployment on the Nvidia Xavier NX, we used TensorRT, a high-performance deep learning inference optimizer and runtime, to significantly accelerate the inference speed of the YOLOv4-tiny model, ensuring that the quadruped robot can process frames in real-time.

| Layer / Block | Output Dimension (Height × Width × Channels) |

|---|---|

| Input Image | $416 \times 416 \times 3$ |

| Conv 1 (stride=2) | $208 \times 208 \times 32$ |

| Conv 2 (stride=2) | $104 \times 104 \times 64$ |

| CSPBlock 1 | $104 \times 104 \times 128$ |

| Pooling 1 | $52 \times 52 \times 128$ |

| CSPBlock 2 | $52 \times 52 \times 256$ |

| Pooling 2 | $26 \times 26 \times 256$ |

| CSPBlock 3 | $26 \times 26 \times 512$ |

| Pooling 3 | $13 \times 13 \times 512$ |

Experimental Validation and Performance Analysis

We conducted a series of experiments to validate the performance of the integrated perception system on the quadruped robot’s hardware platform. The tests were designed to evaluate the accuracy of the distance measurement, the effectiveness of the combined detection and ranging, and the overall computational efficiency, which is paramount for a mobile quadruped robot.

Distance Measurement Accuracy

The first experiment assessed the accuracy of the stereo vision-based distance measurement for the quadruped robot. The binocular camera was fixed, and a target object was placed at known distances ranging from 1 meter to 10 meters, in 1-meter increments. At each distance, the system calculated the distance to the target. To improve robustness, three sample points on the target were measured, and their average was taken as the final distance. The relative error was calculated as:

$$ \text{Relative Error} = \frac{|\text{Measured Distance} – \text{Ground Truth Distance}|}{\text{Ground Truth Distance}} \times 100\% $$

The results demonstrated that the quadruped robot’s perception system maintains a high level of accuracy at close range, with errors gradually increasing as the object moves farther away. This is expected because the disparity $d$ becomes smaller with increasing distance, making it more susceptible to noise and quantization errors in the matching process. Nevertheless, within the 10-meter range, which is highly relevant for a quadruped robot’s operational envelope, the error remained below 5.0%, confirming the system’s suitability for precise environmental perception.

| Ground Truth Distance (m) | Measured Distance (m) | Absolute Error (m) | Relative Error (%) |

|---|---|---|---|

| 1.0 | 1.02 | 0.02 | 2.00 |

| 2.0 | 2.05 | 0.05 | 2.50 |

| 3.0 | 3.08 | 0.08 | 2.67 |

| 4.0 | 4.11 | 0.11 | 2.75 |

| 5.0 | 5.15 | 0.15 | 3.00 |

| 6.0 | 6.22 | 0.22 | 3.67 |

| 7.0 | 7.30 | 0.30 | 4.29 |

| 8.0 | 8.38 | 0.38 | 4.75 |

| 9.0 | 9.42 | 0.42 | 4.67 |

| 10.0 | 10.48 | 0.48 | 4.80 |

Integrated Target Detection and Ranging

The second experiment simulated a dynamic scenario where the quadruped robot is in motion. The camera was moved towards various target objects. The system successfully demonstrated its ability to continuously detect objects, classify them, and update their distances in real-time. As the camera moved closer to an object, the calculated distance decreased correspondingly, and the bounding box along with the class label and distance were accurately overlaid on the video feed. This experiment validated the practical utility of the system for a navigating quadruped robot, providing it with essential information like “person at 3.5 meters” or “chair at 2.1 meters”.

Computational Efficiency on the Quadruped Robot Platform

The final and critical test was to evaluate the runtime performance of the entire system on the Nvidia Xavier NX, the core processor of our quadruped robot. We processed a sequence of 200 image pairs with a resolution of $640 \times 480$. The average processing time for each module and the entire pipeline was measured. The results are summarized in the table below.

| Processing Module | Average Processing Time (ms) | Percentage of Total Time (%) |

|---|---|---|

| SGBM Stereo Matching | 35 | 67.3 |

| YOLOv4-tiny Object Detection | 17 | 32.7 |

| Total System | 52 | 100 |

The total average processing time per frame was 52 milliseconds. This translates to a frame rate of approximately:

$$ \text{Frame Rate} = \frac{1000 \text{ ms/s}}{52 \text{ ms/frame}} \approx 19.2 \text{ FPS} $$

This performance is achieved on the embedded platform of the quadruped robot. The SGBM algorithm constitutes the majority of the computational load. However, a frame rate of 19.2 FPS is sufficient for the typical locomotion speed and reaction time requirements of a quadruped robot, ensuring smooth and responsive environmental perception.

Conclusion

This article has presented a robust and efficient environmental perception system for a quadruped robot based on binocular vision. By integrating a carefully selected SGBM stereo matching algorithm with an optimized YOLOv4-tiny object detection network, we have enabled the robot dog to not only perceive the depth of its surroundings but also to identify specific objects within it. The system is meticulously designed and deployed on an embedded platform representative of what a modern quadruped robot would carry, demonstrating practical feasibility. Experimental results confirm that the system provides accurate distance measurements within a 10-meter range with errors below 5% and operates at an average frame rate of 19.2 FPS. This balance of accuracy and real-time performance makes the system a viable and powerful tool for enhancing the autonomy and intelligence of quadruped robots in a wide array of real-world applications. Future work will focus on further optimizing the algorithms, perhaps by exploring even lighter-weight neural networks for stereo matching and object detection, and on integrating this perception module directly with the locomotion control system of the quadruped robot for fully autonomous navigation and interaction.