In recent years, legged robots have demonstrated remarkable potential in navigating complex and hazardous environments, where they can perform tasks safely and efficiently, reducing human workload and ensuring safety. Among legged robots, quadruped robots, inspired by four-legged mammals, exhibit superior stability compared to bipedal robots and simpler leg structures than hexapod robots, making them easier to control. However, traditional control methods for quadruped robots often involve intricate pipelines, including state estimation, contact scheduling, trajectory optimization, foot placement planning, model predictive control, and operational space control. Designing these components requires extensive expertise, and obtaining accurate dynamic models of robots remains challenging.

Reinforcement learning (RL) has emerged as a powerful approach for robot motion learning, enabling agents to learn through trial-and-error interactions with the environment. By applying deep reinforcement learning algorithms to gait planning and motion control of quadruped robots, engineers can avoid tedious manual tuning and achieve optimal control performance. For instance, methods like Twin Delayed Deep Deterministic Policy Gradient (TD3) have shown promising results in continuous control tasks. However, TD3 suffers from Q-value underestimation due to its fixed selection of the minimum Q-value for updates, leading to inaccurate value estimation and degraded learning efficiency. To address this, we propose a Randomized Ensembled Network TD3 (RE-TD3) algorithm, which integrates multiple Q-networks and randomly selects Q-networks for evaluation, mitigating value inaccuracies and enhancing policy performance.

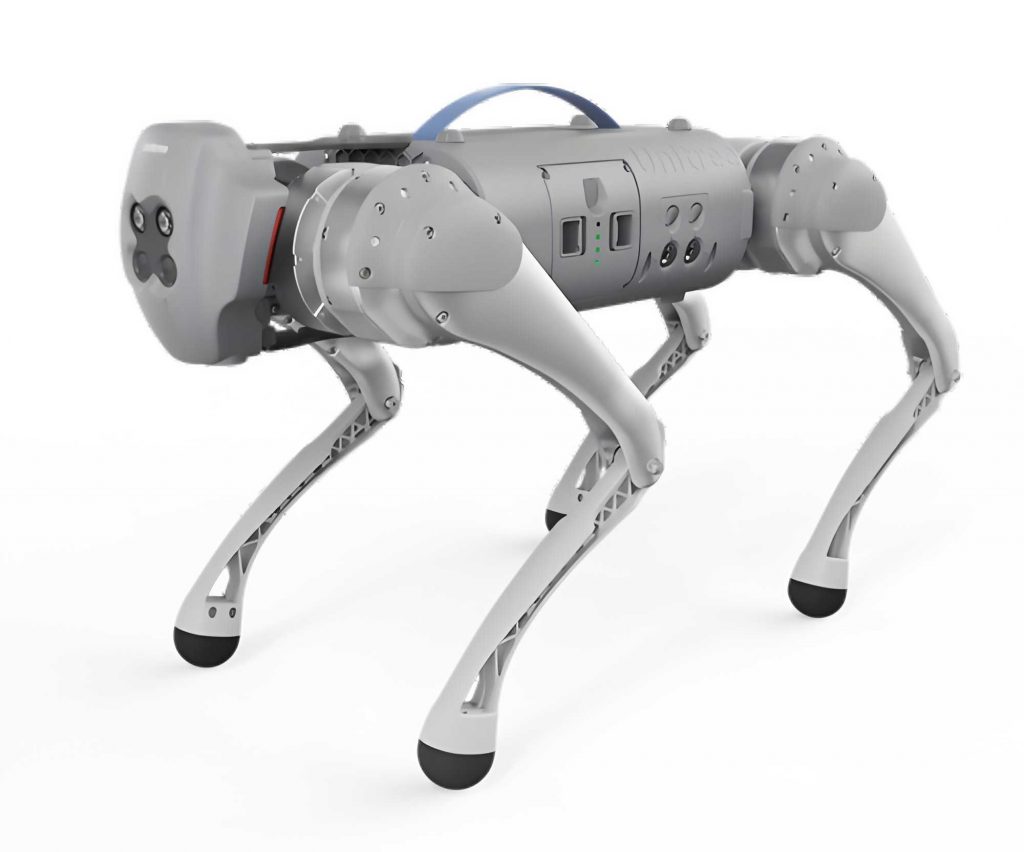

In this work, we focus on gait learning for quadruped robots, specifically the robot dog Laikago, using our RE-TD3 algorithm. We design appropriate state and action spaces, along with a reward function that guides the robot to learn stable and energy-efficient walking gaits. Simulation experiments on the PyBullet platform demonstrate that RE-TD3 outperforms mainstream RL algorithms like TD3, Soft Actor-Critic (SAC), Proximal Policy Optimization (PPO), and Deep Deterministic Policy Gradient (DDPG) in terms of reward convergence, body stability, and directional accuracy. Our contributions include the novel RE-TD3 algorithm, a tailored reward function for quadruped gait learning, and comprehensive experimental validation.

Related Work

Reinforcement learning is a branch of machine learning where an agent learns to make decisions by interacting with an environment to maximize cumulative rewards. Unlike supervised learning, which relies on labeled datasets, RL agents explore actions based on the current state to receive rewards, iteratively refining their policy until optimal behavior is learned. The interaction process between the agent and environment is depicted in Figure 1, where the agent observes the state $s_t$, takes an action $a_t$, and receives a reward $r_t$ and next state $s_{t+1}$.

The RL problem can be formalized as a Markov Decision Process (MDP), represented by the tuple $M = \langle S, A, P, R, \gamma \rangle$, where $S$ is the state space, $A$ is the action space, $P$ is the state transition probability, $R$ is the reward function, and $\gamma \in [0,1]$ is the discount factor balancing immediate and future rewards. The state-action value function $Q^\pi(s, a)$ represents the expected cumulative reward after taking action $a$ in state $s$ under policy $\pi$, defined as:

$$Q^\pi(s, a) = \mathbb{E}_\pi \left[ R_t \mid s, a \right]$$

where $R_t$ is the reward at time $t$. The Bellman equation relates the value function to future rewards:

$$Q^\pi(s, a) = \mathbb{E}_\pi \left[ R_t + \gamma Q^\pi(s_{t+1}, a_{t+1}) \mid s, a \right]$$

Traditional RL algorithms like Q-learning and SARSA iteratively solve the Bellman equation to maximize the value function and derive the optimal policy $\pi^*$:

$$\pi^* = \arg\max_a \mathbb{E}[R]$$

In the context of quadruped robots, RL has been successfully applied to gait learning. For example, Tan et al. used reference trajectory-based deep RL to train trot and gallop gaits for the Minitaur robot in simulation, eliminating manual parameter tuning. Hwangbo et al. employed Trust Region Policy Optimization (TRPO) to train a policy network in simulation and deployed it on the ANYmal robot, achieving performance comparable to model-based controllers. Margolis et al. proposed a structured motion controller that learned multiple gaits like trotting and bounding by adjusting reward functions and motion parameters. However, these methods often rely on complex reward shaping and may suffer from sample inefficiency.

TD3 algorithm, an extension of DDPG, incorporates Double Q-learning to address overestimation bias in Q-values. It uses two critic networks and selects the minimum Q-value for target updates, as shown in Figure 2. Despite its improvements, TD3’s fixed min-selection can lead to underestimation and suboptimal exploration. Our RE-TD3 algorithm builds on TD3 by integrating multiple Q-networks and introducing randomness in Q-network selection, enhancing value estimation accuracy and policy performance for quadruped robot gait learning.

System Modeling

RE-TD3 Algorithm

The core idea of RE-TD3 is to integrate $N$ Q-networks in the critic component and randomly select a subset of these networks for target value computation, rather than relying on a fixed pair. This approach reduces the risk of systematic underestimation and improves exploration efficiency. The overall network architecture of RE-TD3 is illustrated in Figure 3.

In RE-TD3, the critic consists of $N$ Q-networks, each comprising three linear layers: an input layer receiving the concatenated state and action (49 dimensions), two hidden layers with 256 neurons each, and an output layer producing a single Q-value. The actor network processes the state observation (37 dimensions) through a fully connected layer (37×256), a hidden layer (256×256), and an output layer (256×12) that predicts the robot’s next action. All networks use ReLU activation functions and are optimized with Adam optimizer via stochastic gradient descent.

The actor network updates its parameters by maximizing the average Q-value from the $N$ networks:

$$Q = \bar{Q}(s_t, \pi_\phi(s_t))$$

where $\bar{Q}(s_t, \pi_\phi(s_t))$ is the mean Q-value over all $N$ networks. For critic updates, we randomly sample two Q-networks $Q_{\theta_a}$ and $Q_{\theta_b}$ from the ensemble and compute the target value using the minimum of their target counterparts:

$$Q_{\theta_a}, Q_{\theta_b} = \text{random}(Q_{\theta_N})$$

$$y_t = r_t + \gamma \min_{i=a,b} Q_{\theta_i’}(s_{t+1}, a’_t)$$

where $a’_t = \pi_{\phi’}(s_{t+1}) + \epsilon$ is the target action with noise $\epsilon \sim \text{clip}(\mathcal{N}(0, \sigma), -c, c)$. Each Q-network is then updated by minimizing the temporal difference error:

$$\Delta \theta_i = \nabla_{\theta_i} (y_t – Q_{\theta_i}(s_t, a_t))^2$$

The target networks are softly updated with learning rate $\tau$:

$$\theta_i’ \leftarrow \tau \theta_i + (1 – \tau) \theta_i’$$

$$\phi’ \leftarrow \tau \phi + (1 – \tau) \phi’$$

The complete RE-TD3 algorithm is summarized in Table 1.

| Step | Description |

|---|---|

| 1 | Initialize $N$ Q-network parameters $\theta_1, \theta_2, \dots, \theta_N$ and policy network parameter $\phi$. |

| 2 | Initialize target networks $\theta_i’ \leftarrow \theta_i$ for $i=1,2,\dots,N$ and $\phi’ \leftarrow \phi$. |

| 3 | Initialize replay buffer $\beta$. |

| 4 | For each episode:

|

Gait Learning for Quadruped Robots

We apply RE-TD3 to gait learning for the quadruped robot Laikago. To accelerate training, we incorporate an open-loop controller that provides rhythmic signals as motion priors and reference trajectories. The robot’s control action is the sum of the open-loop controller output and the neural network’s predicted action.

The learning control process is depicted in Figure 4. The state observation space has 37 dimensions, including:

- Joint angles and angular velocities (12 × 2 = 24 dimensions)

- Binary foot contact detection (4 dimensions)

- Distance sensor data (robot position, 3 dimensions)

- Orientation (pitch, roll, yaw) and linear acceleration (3 × 2 = 6 dimensions)

The action space consists of 12 dimensions, representing desired joint angles for each motor. The policy network takes the 37-dimensional state as input and predicts the next action. After executing the action, the robot transitions to a new state, and the network parameters are updated based on the reward. The combined action is tracked by a PD controller to achieve precise leg motion control.

Reward Function Design

The reward function is designed to encourage stable, efficient, and energy-conscious locomotion. It consists of three components:

$$r_t = k_1 r_t^v + k_2 r_t^o + k_3 r_t^e$$

where $k_1 = 0.3$, $k_2 = 0.5$, and $k_3 = 0.2$ are weighting coefficients that balance the importance of each term. These values ensure that stability and directionality are prioritized while maintaining reasonable energy efficiency.

The velocity reward $r_t^v$ encourages the robot to move in the desired direction:

$$r_t^v = d_t \cdot (P_{t-1} – P_t)$$

where $P_t$ is the robot’s position at time $t$, and $d_t$ is the desired direction vector.

The stability reward $r_t^o$ penalizes excessive body orientation deviations:

$$r_t^o = 1 – \tan[0.16(r^2 + p^2)]$$

where $r$ and $p$ are the roll and pitch angles measured by the IMU. This term ensures the robot maintains a stable posture during locomotion.

The energy reward $r_t^e$ discourages high energy consumption:

$$r_t^e = -E_t$$

where $E_t$ is the energy expended at time $t$. Minimizing energy usage promotes efficient gait patterns.

By combining these rewards, the robot learns to walk steadily in the desired direction while conserving energy, resulting in a robust and efficient gait for the quadruped robot.

Simulation Experiments

Experimental Setup

We conduct simulation experiments in PyBullet to validate the performance of RE-TD3 in gait learning for quadruped robots. The environment is a flat terrain where the robot dog Laikago learns to walk from scratch. The world coordinate system defines the robot’s position, with the x-axis as the forward direction, z-axis vertical, and y-axis determined by the right-hand rule.

The robot dog Laikago is a 12-degree-of-freedom quadruped robot with three joints per leg: hip, swing, and knee joints, mimicking the posture of real quadruped animals. Key parameters are listed in Table 2.

| Parameter | Value |

|---|---|

| Standing Dimensions (mm) | 370 × 270 × 295 |

| Mass (kg) | 11 |

| Joint Degrees of Freedom | 12 |

| Hip Joint Range | -46° to 46° |

| Swing Joint Range | -60° to 240° |

| Knee Joint Range | -154.5° to 52.5° |

We set the training steps to 1 million to ensure convergence, allowing the quadruped robot to progress from unstable movements to steady walking. Key hyperparameters for RE-TD3 are listed in Table 3. For comparison, we keep hyperparameters consistent across algorithms, with SAC’s temperature coefficient $\alpha = 0.2$ for optimal performance.

| Parameter | Value |

|---|---|

| Critic Learning Rate ($l_{cr}$) | 0.003 |

| Actor Learning Rate ($l_{ar}$) | 0.0003 |

| Discount Factor ($\gamma$) | 0.99 |

| Soft Update Rate ($\tau$) | 0.005 |

| Batch Size | 256 |

| Max Steps per Episode | 600 |

| Replay Buffer Capacity | 1,000,000 |

Results and Analysis

First, we determine the optimal value of $N$ for RE-TD3 by comparing different $N$ values under identical conditions. As shown in Figure 5, $N=4$ achieves the fastest convergence at around 400,000 steps, with a stable reward of approximately 3300. Other $N$ values exhibit slower convergence or oscillations, indicating that $N=4$ is optimal for our task.

Figure 6 illustrates the gait of the robot dog at different training stages. In the early stage (100,000 steps), the legs swing erratically, and the center of mass height varies irregularly, indicating unstable walking. By the mid-stage (300,000 steps), the legs begin rhythmic movements, and the center of mass stabilizes, though the body still leans forward. In the final stage (400,000 steps), the legs exhibit coordinated, rhythmic motions, and the robot maintains a steady pace and posture, successfully learning an efficient gait.

To evaluate RE-TD3’s superiority, we compare it with TD3, SAC, PPO, and DDPG in terms of reward value, center of mass height stability, and deviation from the desired direction.

Reward Value: The reward curves in Figure 7 show that RE-TD3 converges rapidly to around 3300 after 400,000 steps and maintains high stability. TD3 converges slower to about 2500 with fluctuations, SAC converges early but plateaus at 2500, DDPG exhibits severe oscillations without convergence, and PPO starts with prior knowledge but stabilizes at 2800. RE-TD3 achieves higher rewards with faster convergence, demonstrating its efficiency.

Center of Mass Height: We assess body stability by measuring the deviation of the center of mass height in the z-axis from the initial height. As shown in Figure 8, RE-TD3 results in a height variation between 0.23 m and 0.25 m (2 cm fluctuation), indicating stable locomotion. In contrast, TD3 shows variations from 0.2 m to 0.26 m (3 cm), SAC from 0.24 m to 0.28 m (2 cm but higher baseline), DDPG exhibits severe instability, and PPO fluctuates by 8 cm. RE-TD3 provides the most stable gait for the quadruped robot.

Directional Deviation: We measure the deviation along the y-axis to evaluate how well the robot walks straight. Figure 9 shows that RE-TD3 maintains a minimal deviation of around 0.2 m, closely following the desired path. TD3 deviates by -0.5 m, SAC by up to 2 m, PPO by 0.3 m, and DDPG by 0.5 m. RE-TD3’s low deviation confirms its ability to learn a straight-walking gait for the robot dog.

Conclusion

We proposed the RE-TD3 algorithm, which integrates multiple Q-networks and introduces randomness in network selection to address Q-value underestimation in TD3. By determining the optimal ensemble size $N=4$, we enhanced value estimation accuracy and policy performance for quadruped robot gait learning. Our method includes a tailored reward function that promotes stable, energy-efficient, and directional locomotion.

Simulation experiments on the PyBullet platform demonstrated that RE-TD3 enables the robot dog Laikago to learn a stable trotting gait from scratch, outperforming TD3, SAC, PPO, and DDPG in reward convergence, body stability, and directional accuracy. Specifically, RE-TD3 improved body stability by 33%, reward value by 32%, and reduced walking deviation by 60% compared to the next best algorithm.

However, this study has limitations. The algorithm has not been deployed on a physical robot, and the experiments were conducted only on flat terrain without obstacles. Future work will focus on real-world deployment and extending the method to complex environments with obstacles and uneven terrain, enabling the quadruped robot to learn adaptive gaits under challenging conditions.