In recent years, with the rapid advancement of intelligent control technologies, quadruped robots have demonstrated remarkable capabilities in traversing complex and rugged unstructured terrains swiftly and robustly. These robot dogs are widely deployed in industrial inspection, security and rescue operations, and military exploration missions. High-precision localization is fundamental to achieving these tasks. While the Global Navigation Satellite System (GNSS) offers extensive coverage and high accuracy, its performance degrades in environments such as electromagnetically noisy factories or densely forested areas due to signal occlusion and multipath effects. To address these challenges, quadruped robots are often equipped with various external environment-interactive sensors and proprioceptive sensors, leveraging sensor fusion techniques to enhance localization reliability. External sensors like LiDAR and vision are susceptible to interference in complex environments, such as significant illumination changes or insufficient geometric and textural information. In contrast, proprioceptive sensors like leg odometry and Inertial Measurement Units (IMUs) offer high autonomy, strong environmental adaptability, and low cost. When external perception fails, inertial navigation systems become the core for maintaining localization accuracy. Thus, improving inertial positioning precision is crucial for quadruped robots to achieve accurate navigation in complex environments.

To mitigate nonlinear errors in sensor fusion algorithms, researchers have proposed various solutions. For instance, invariant extended Kalman filtering (InEKF) has been designed for legged robot pose estimation, leveraging the group-affine property of contact-inertial systems to correct base position using leg odometry observations. Other approaches involve deriving error kinematics with SO(3) manifold structures or minimizing cost functions over fixed time windows. However, these methods often do not address observation errors in leg odometry, leading to inevitable long-distance positioning errors. Accurate contact event estimation is a prerequisite for leg odometry observations. While probabilistic models and threshold-based methods have been used to assess footstep stability, they are prone to misdetection with changing motion states. Recent advancements incorporate deep learning for contact event estimation, using one-dimensional convolutional networks to fuse joint encoders, base IMU, and forward kinematics constraints. Nonetheless, these methods may overlook temporal dependencies and involve high parameter dimensions. To compensate for the strict foot-end stationary assumption in leg odometry, additional consumer-grade IMUs mounted on the feet have been used in extended Kalman filters (EKF) to estimate foot position and velocity, reducing long-distance errors. However, EKF state dimensions scale with the number of legs, limiting scalability.

To overcome these limitations, this paper proposes an inertial navigation algorithm for quadruped robots assisted by foot-end inertial information. The algorithm leverages foot-end IMU data combined with lever arm effects to compute foot-ground contact velocity, constructing an enhanced leg odometry observation model that compensates for velocity loss in traditional models. A robust stationary interval contact event estimator based on a Temporal Convolutional Network (TCN) and Bidirectional Gated Recurrent Unit (BiGRU) is developed to accurately identify contact events by extracting long- and short-term temporal features from foot-end inertial and joint encoder data. The proposed leg odometry is integrated as measurement information into an invariant extended Kalman filter (InEKF) to correct inertial navigation system errors during stationary intervals. This approach does not rely on external information, minimizes cumulative position errors, and maintains high localization accuracy over extended periods in various outdoor terrains.

System Framework and Fundamental Theory

The overall framework of the proposed algorithm is illustrated in Figure 1. The proprioceptive sensors include a base IMU, foot-end IMUs, and joint encoders. First, leg odometry is enhanced using foot-end inertial information and joint angles. Then, a TCN-BiGRU network-based stationary interval contact event estimator determines stable contact intervals. To ensure measurement accuracy, measurement mismatch isolation and adaptive noise methods are incorporated. Finally, an InEKF fuses the measurement information with the base Inertial Navigation System (INS) mechanical alignment to output accurate pose results.

To accurately describe the pose relationships during quadruped robot motion, coordinate systems are defined as follows: the navigation frame (n-frame), the base frame (b-frame) coinciding with the base IMU frame, and the foot-end IMU frame (f-frame). The INS mechanical alignment in the navigation frame estimates the state including attitude $R_b^n$, velocity $v^n$, and position $p^n$. Given the high noise of MEMS gyroscopes, the Earth’s rotation rate is neglected, leading to simplified navigation equations:

$$ \dot{R}_b^n = R_b^n [\omega^b]_\times $$

$$ \dot{v}^n = R_b^n a^b + g^n $$

$$ \dot{p}^n = v^n $$

Here, $[\cdot]_\times$ denotes the skew-symmetric matrix of a vector, $g^n$ is the gravitational acceleration vector in the n-frame, and $\omega^b$ and $a^b$ represent gyroscope and accelerometer outputs, respectively. For low-cost IMUs, the measurement equations are:

$$ \omega^b = \tilde{\omega}^b – b_\omega – n_\omega $$

$$ a^b = \tilde{a}^b – b_a – n_a $$

where $\tilde{\omega}^b$ and $\tilde{a}^b$ are true angular velocity and acceleration, $b_\omega$ and $b_a$ are biases, and $n_\omega$ and $n_a$ are zero-mean Gaussian white noises. When the robot dog’s foot contacts the ground without slipping, the foot-end position resolution equation is:

$$ \dot{d}^n = R_b^n \phi(\tilde{\alpha}) (- n_v) $$

where $d^n$ is the foot position in the n-frame, $\phi(\tilde{\alpha})$ is the forward kinematics attitude function from the f-frame to the b-frame, $\tilde{\alpha}$ is the true joint angle measurement, and $n_v$ is zero-mean Gaussian white noise accounting for potential slip and joint encoder noise.

The contact-aided InEKF error state equation is derived by combining the INS mechanics and foot-end position equations. The group state $\chi_t \in SE_{N+2}(3)$ and its dynamics are defined, with $N$ being the number of foot contact points. The right-invariant error form $\eta_t^r$ between the true state $\chi_t$ and estimated state $\hat{\chi}_t$ is used, leveraging the group-affine property to ensure linear error propagation. The error dynamics are linearized, and the state is augmented to include bias errors, resulting in the state transition matrix $F_t$ and noise driving matrix $G_t$. The InEKF provides strong convergence and consistency by maintaining independence from the trajectory.

Foot-End Inertial Information Assisted Leg Odometry Observation

Leg odometry for quadruped robots relies on forward kinematics to relate foot-end and base poses. The joint encoder measurements $\alpha = \tilde{\alpha} – n_\alpha$ consist of true values $\tilde{\alpha}$ and noise $n_\alpha$. The forward kinematics position function $g(\alpha)$ gives the foot-end position relative to the base in the b-frame, with Jacobian $J(\alpha)$. In the n-frame, the foot-end position and velocity are:

$$ d = p + R_b^n g(\tilde{\alpha} – n_\alpha) $$

$$ \dot{d} = v + R_b^n [\tilde{\omega}^b]_\times g(\tilde{\alpha}) + R_b^n J(\tilde{\alpha}) \dot{\tilde{\alpha}} $$

Assuming the foot-end is stationary during contact ($\dot{d} = 0$), the leg odometry observations for foot position $g(\tilde{\alpha})$ and base velocity $\tilde{v}^b$ are:

$$ g(\tilde{\alpha}) = (R_b^n)^T (d – p) + J(\tilde{\alpha}) n_\alpha $$

$$ v^b = – \left( [\tilde{\omega}^b]_\times g(\tilde{\alpha}) + J(\tilde{\alpha}) \dot{\tilde{\alpha}} \right) + (R_b^n)^T n_v $$

Here, $n_v$ aggregates noise from contact point changes, encoder errors, and base IMU gyroscope bias. For robot dogs with spherical feet, rapid gaits cause minor displacement at the contact point due to rolling, violating the stationary assumption. To observe foot velocity, an IMU is mounted on the foot. Using lever arm effects, the contact point position $r_c$ relates to the foot IMU position $r_f$ via the arm $r_l$ and deformation error $\delta_l$:

$$ r_c = r_f – (r_l + \delta_l) $$

Differentiating and setting $\dot{r}_c = 0$ yields the foot IMU velocity:

$$ v_f = R_b^n \phi(\alpha) \left( [\omega^f]_\times r_l^f \right) + \dot{\delta}_l $$

where $\omega^f$ is the foot IMU gyroscope measurement, $r_l^f$ is the lever arm in the f-frame, and $R_b^n \phi(\alpha)$ is the rotation from the f-frame to the n-frame. The enhanced base velocity observation $v^b$ becomes:

$$ v^b = \phi(\tilde{\alpha}) \left( [\tilde{\omega}^f]_\times r_l^f \right) – [\tilde{\omega}^b]_\times g(\tilde{\alpha}) – J(\tilde{\alpha}) \dot{\tilde{\alpha}} + (R_b^n)^T n_f $$

where $n_f$ is zero-mean Gaussian white noise. During contact events, foot-end angular velocity and pressure data identify stationary intervals where foot rolling is stable. The noise variance $\sigma_f$ adapts based on foot IMU acceleration:

$$ \sigma_f = \frac{ \| R_b^n \phi(\alpha) a^f + g^n \| }{ \| g^n \| } \sigma_f^0 $$

with $\sigma_f^0$ as a preset noise parameter. The measurement model for the InEKF uses foot position and base velocity observations:

$$ z_k = \begin{bmatrix} R_{b,k}^n g(\tilde{\alpha}_k) – (\hat{d}_{i,k} – \hat{p}_k) \\ R_{b,k}^n v_k^b – \hat{v}_k \end{bmatrix} = H_k \begin{bmatrix} \xi_k \\ \zeta_k \end{bmatrix} + n_k $$

where $H_k$ is the observation matrix, and $n_k$ is the observation noise. To handle measurement mismatches from contact misdetection, an innovation covariance matrix $C_k$ over a window of $N$ measurements is computed:

$$ C_k = \frac{1}{N} \sum_{j=k-N}^k z_k z_k^T = H_k P_{k+1|k} H_k^T + A_k R_k $$

If $\text{tr}(C_k) \gg \text{tr}(H_k P_{k+1|k} H_k^T + R_k)$, measurements are rejected. Otherwise, an adaptive matrix $A_k$ adjusts the measurement noise $R_k$:

$$ A_k = \left( \frac{1}{N} \sum_{j=k-N}^k z_k z_k^T – H_k P_{k+1|k} H_k^T \right) R_k^{-1} $$

$$ A_k^* = \text{diag}(a_1, \dots, a_n), \quad a_i = \max\{1, (A_k)_{ii}\} $$

$$ R_k = A_k^* R_{k-1} $$

This ensures positive definiteness and adapts to measurement quality.

Stationary Interval Contact Event Estimator Based on TCN-BiGRU Network

Accurate stationary interval contact event estimation is crucial for applying leg odometry observations. The rhythmic and periodic gait of quadruped robots implies temporal dependencies in foot sensor data, making contact event estimation a time-series classification problem. A deep learning approach融合 TCN and BiGRU networks is proposed to extract long-term and short-term temporal features. The TCN captures long-range dependencies through dilated convolutions, while the BiGRU models bidirectional short-term dependencies. The network structure is shown in Figure 5.

The contact state for each leg $l = \{\text{FL}, \text{FR}, \text{RL}, \text{RR}\}$ is $T_l \in \{0,1\}$, with 1 indicating stable contact and 0 indicating non-stable contact (no contact or impact). The overall state $T = [T_{\text{FL}} T_{\text{FR}} T_{\text{RL}} T_{\text{RR}}]$ has 16 classes, encoded as hexadecimal $T_{\text{hex}}$. The estimator uses foot IMU acceleration, angular velocity, and joint angular velocity as input. With a gait period of 0.2–0.5 s and sensor sampling at 200 Hz, a window size of 100 is used, yielding input vectors $x \in \mathbb{R}^{100 \times 36}$ after normalization.

Training labels are generated via foot pressure thresholding and inertial data post-processing to identify stationary intervals. For robots without foot pressure sensors, optimal detectors based on foot inertial data can be used. During training, batches of size 30 are used with shuffled data for generalization. A piecewise learning rate starts at 0.0025, halving every 10 epochs for 30 epochs. The Adam optimizer minimizes cross-entropy loss.

Experimental results on five terrains show high accuracy in stationary interval estimation, as summarized in Table 1. The network achieves over 96% accuracy across terrains, with precision and recall above 95%, demonstrating robustness for the quadruped robot.

| Test Route | Test Samples | Average Accuracy | Average Precision | Average Recall |

|---|---|---|---|---|

| Asphalt Road | 22838 | 0.9871 | 0.9730 | 0.9631 |

| Rough Terrain | 18476 | 0.9810 | 0.9737 | 0.9477 |

| Slope | 15981 | 0.9723 | 0.9534 | 0.9168 |

| Grass | 14686 | 0.9713 | 0.9383 | 0.9665 |

| Steps | 7670 | 0.9548 | 0.9192 | 0.9565 |

| All Terrains | 79651 | 0.9769 | 0.9511 | 0.9590 |

Experiments and Analysis

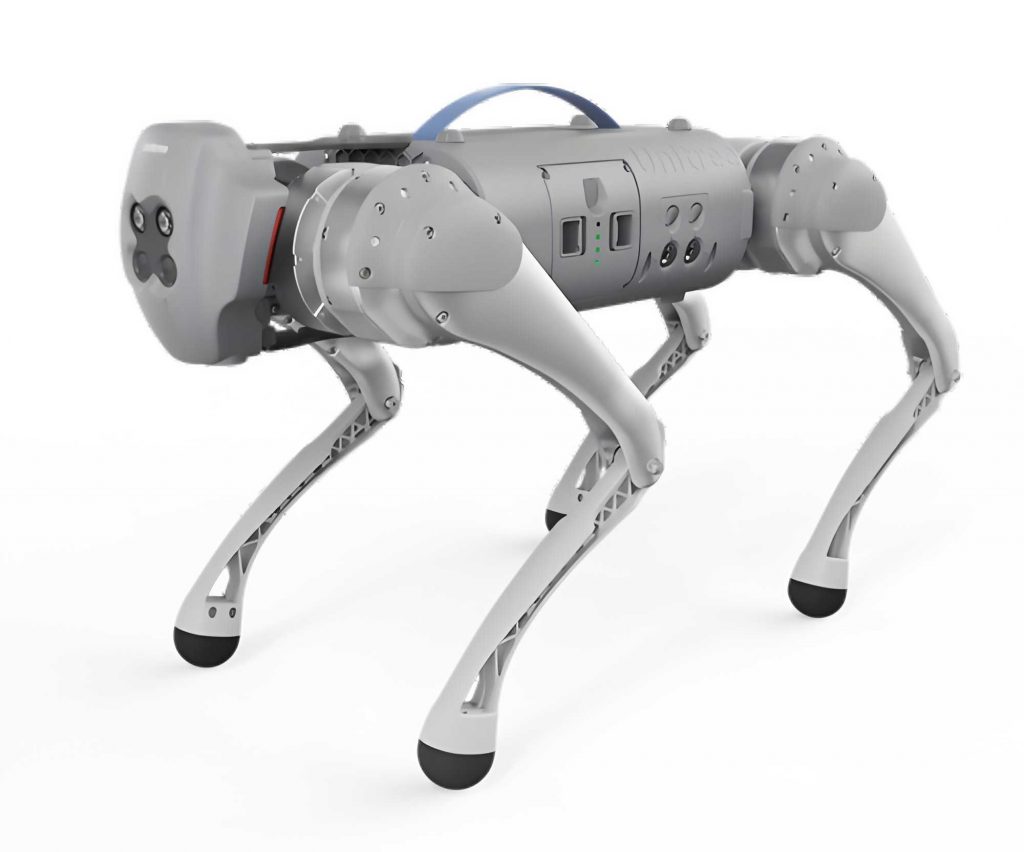

Experiments are conducted on a Unitree GO1 quadruped robot platform, equipped with foot-end IMUs, RTK for ground truth, and an embedded system running ROS at 200 Hz. Data packets are processed offline on a PC with Ubuntu 20.04, 16 GB RAM, and a 3.30 GHz CPU. The algorithm is implemented in ROS, with modules for the TCN-BiGRU contact estimator, enhanced leg odometry, and InEKF pose estimation.

First, contact event estimation is evaluated on five outdoor terrains, with the robot moving at up to 1 m/s in trot gait, performing forward, backward, lateral, and turning motions. Data from 265,501 points are split 4:3:3 into training, validation, and test sets. The TCN-BiGRU network achieves over 96% accuracy, as shown in Table 1. Precision and recall exceed 95%, indicating reliable stationary interval detection for the robot dog.

Next, the performance of foot-end inertial information-assisted leg odometry is tested on a 237.53 m outdoor non-closed-loop route. Algorithm A uses contact InEKF with the proposed contact estimator, Algorithm B is an EKF-based MIPO method, and the proposed method integrates foot-end IMU data. Positioning results are compared against RTK fixed solutions and average reference velocity over a 4 s window. Evaluation metrics include RMSE, endpoint error percentage, traveled distance, and stationary interval precision. Results are shown in Table 2 and Figure 10.

| Algorithm | East RMSE (m) | North RMSE (m) | Endpoint Error (%) | Traveled Distance (m) | Average Precision (%) |

|---|---|---|---|---|---|

| Algorithm A | 15.75 | 0.66 | 11.04 | 210.79 | 95.38 |

| Algorithm B | 1.61 | 4.36 | 3.90 | 236.34 | – |

| Proposed | 0.61 | 0.70 | 0.93 | 239.05 | 95.38 |

Algorithm A suffers from velocity loss due to the foot-end stationary assumption, leading to high endpoint error. Algorithm B compensates with foot-end IMU data but has higher north RMSE due to inconsistent contact estimation. The proposed method achieves the lowest RMSE and endpoint error, demonstrating effective velocity compensation and stable noise adaptation.

Finally, the algorithm is validated on a 290 m closed-loop route with mixed terrains (cement, grass, steps, GPS-denied corridor). Algorithms A, B, C (Algorithm B with proposed contact estimator), and the proposed method are compared. Contact estimator performance is shown in Table 3, and positioning errors in Table 4.

| Mixed Terrain Route | Test Samples | Average Accuracy | Average Precision | Average Recall |

|---|---|---|---|---|

| Test 1 | 94206 | 0.9630 | 0.9382 | 0.9219 |

| Test 2 | 89529 | 0.9660 | 0.9493 | 0.9346 |

| Route | Algorithm | East RMSE (m) | North RMSE (m) | Endpoint Error (%) |

|---|---|---|---|---|

| Test 1 | Algorithm A | 4.08 | 5.84 | 0.64 |

| Algorithm B | 6.11 | 5.88 | 6.78 | |

| Algorithm C | 1.11 | 1.37 | 0.78 | |

| Proposed | 0.70 | 0.64 | 0.20 | |

| Test 2 | Algorithm A | 5.41 | 8.11 | 1.12 |

| Algorithm B | 3.56 | 4.09 | 4.84 | |

| Algorithm C | 4.48 | 2.46 | 2.14 | |

| Proposed | 1.45 | 0.84 | 0.66 |

Algorithm A exhibits errors from velocity constraints, while Algorithm B shows significant heading drift. Algorithm C improves with the contact estimator but has reduced accuracy in Test 2 due to misdetection. The proposed method achieves the lowest average east and north RMSE (1.07 m and 0.74 m) and endpoint error (0.43%), highlighting its robustness and accuracy for the quadruped robot in mixed terrains.

Conclusion

This paper addresses the sharp decline in localization accuracy for quadruped robots under GNSS denial and environmental perception degradation by proposing an inertial navigation algorithm assisted by foot-end inertial information. The algorithm compensates for velocity loss in the foot-end stationary assumption by constructing a leg odometry observation model using foot-end IMU data. A TCN-BiGRU network accurately estimates stationary contact intervals by extracting temporal features from foot-end inertial and joint encoder data. The enhanced leg odometry is integrated into an InEKF to correct inertial navigation errors. Experimental results on outdoor non-closed-loop and mixed-terrain closed-loop routes demonstrate the algorithm’s robustness, maintaining high localization accuracy without external information. The proposed approach significantly improves navigation precision for robot dogs in complex environments, with minimal cumulative error and consistent performance across diverse terrains.