As we witness the rapid integration of artificial intelligence (AI) and robotics into various sectors, the healthcare domain stands at the forefront of this transformation. In clinical nursing, the emergence of embodied intelligence—a paradigm where AI systems interact with the physical world through perception, decision-making, and action loops—heralds a new era for intelligent robot applications. These robots are evolving from mere tools to collaborative partners, capable of adapting to dynamic environments and delivering personalized care. In this article, I explore the current landscape, core capabilities, conceptual frameworks, and future implications of intelligent robot systems in clinical nursing, drawing on advancements in embodied intelligence to outline a path toward human-robot synergy.

The fusion of AI and robotics has already demonstrated significant potential in healthcare, from surgical assistants such as the da Vinci system to rehabilitation exoskeletons. In nursing, however, a field characterized by high-touch, patient-centered care, intelligent robots have been adopted only gradually. Current applications focus mostly on repetitive tasks such as medication delivery, vital-signs monitoring, and disinfection; these relieve nurses of physical burdens but fall short of holistic integration. Studies have documented robots performing 14 basic and 12 specialized nursing tasks, with transport assistance and intravenous infusion among the most common. Yet these systems remain largely experimental or confined to specific settings such as ICUs and operating rooms, where they assist with venipuncture or instrument management. The challenge lies in scaling them to the complexity of general wards and home care, where emotional support and adaptive interaction are paramount. As I argue throughout this article, the future of nursing robotics hinges on embodied intelligence, which enables robots to perceive, learn, and respond in real time, rather than on merely executing pre-programmed commands.

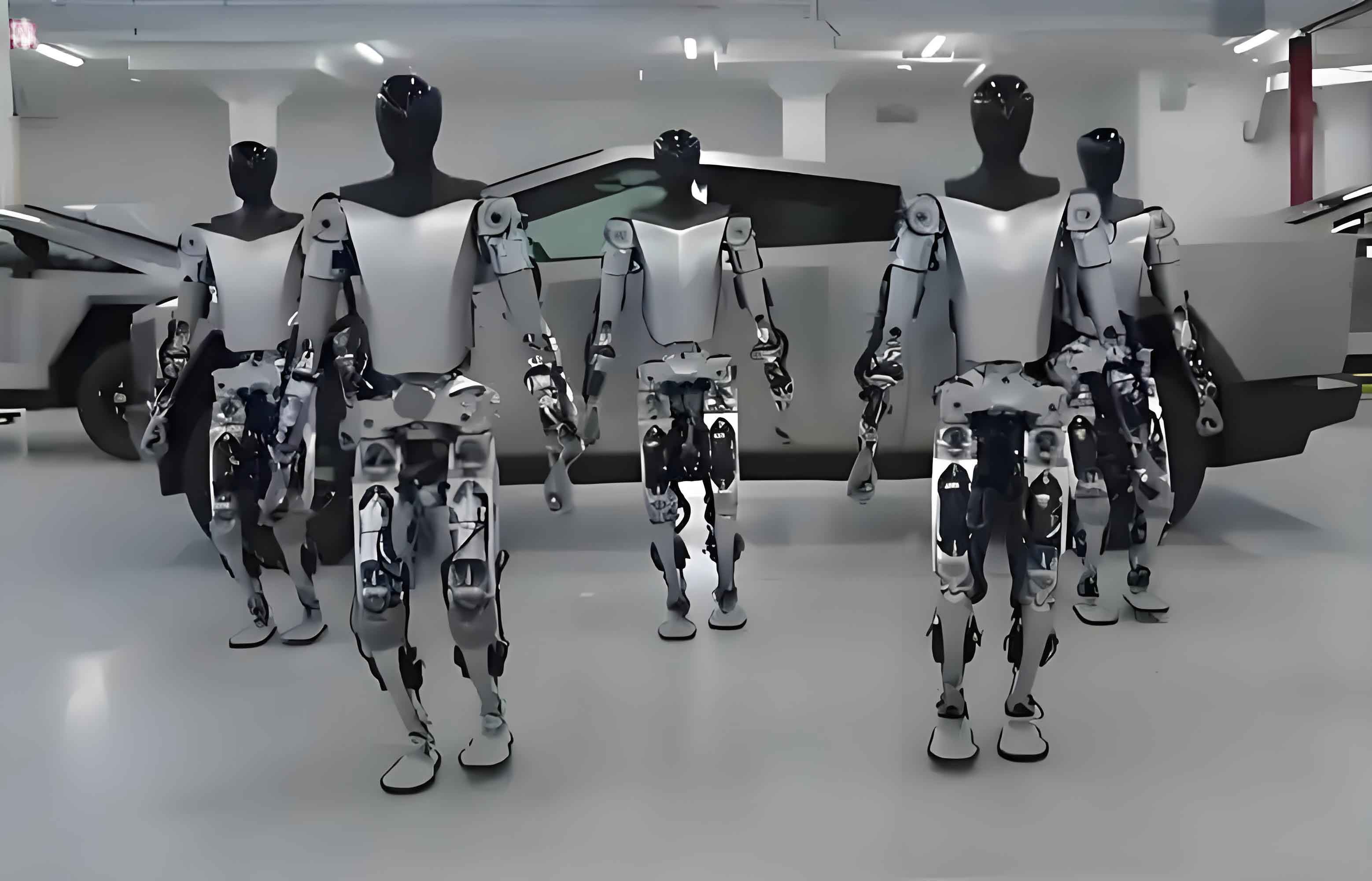

To realize their full potential in clinical nursing, intelligent robot systems must possess three core capabilities: environmental interaction, emotional engagement, and self-learning evolution. Together, these capabilities mark the transition from assistive devices to autonomous partners.

First, environmental interaction means perceiving and adapting to physical surroundings through multimodal sensors (vision, sound, and touch) that support navigation, obstacle avoidance, and task execution. For example, a robot might use LiDAR and cameras to map a hospital room so that it can move safely while delivering supplies. The robot's form, whether humanoid or quadrupedal, influences its adaptability, and recent research uses large language models (LLMs) as “brains” to coordinate bimanual tasks such as assisting with patient hygiene.

Second, emotional engagement requires robots to recognize and respond to human emotions through natural language processing and affective computing. A robot could detect patient anxiety from vocal tone, then offer a calming intervention or alert a nurse. LLM-driven chatbots have shown promise in mental health support, and embedding such capabilities in physical robots could strengthen patient-centered care.

Third, self-learning evolution allows robots to improve over time through imitation learning and accumulated data. Mobile ALOHA, for instance, learns household tasks from a small number of demonstrations, and surgical robots have achieved high levels of autonomy in live animal trials. In nursing, this could mean robots refining bed-making or patient-transfer techniques based on feedback, ultimately reducing training costs and improving efficiency.
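
Sensor-data fusion underlies the environmental-interaction capability described above. As a minimal sketch, assuming two independent sensors (for example, a LiDAR and a depth camera) that each report a distance to an obstacle with a known variance, the readings can be combined by inverse-variance weighting. The function names, the sensor noise values, and the 0.5 m safety margin are hypothetical; production robots typically rely on richer estimators such as Kalman filters.

```python
import math


def fuse_estimates(readings):
    """Inverse-variance weighted fusion of independent distance estimates.

    readings: list of (estimate_m, variance_m2) pairs, e.g. one from a LiDAR
    and one from a depth camera. Returns (fused_estimate_m, fused_variance_m2).
    """
    if not readings:
        raise ValueError("at least one reading is required")
    # Each sensor is weighted by 1/variance, so more precise sensors count more.
    weights = [1.0 / variance for _, variance in readings]
    total_weight = sum(weights)
    fused = sum(w * est for w, (est, _) in zip(weights, readings)) / total_weight
    # The fused variance is smaller than any single sensor's variance.
    return fused, 1.0 / total_weight


def should_slow_down(readings, safety_margin_m=0.5):
    """Hypothetical obstacle rule: slow down if a conservative distance bound
    (fused estimate minus two standard deviations) falls inside the margin."""
    fused, variance = fuse_estimates(readings)
    return fused - 2.0 * math.sqrt(variance) < safety_margin_m


# Illustrative readings to one obstacle: LiDAR (sigma = 2 cm), camera (sigma = 6 cm).
lidar = (2.00, 0.02 ** 2)
camera = (2.10, 0.06 ** 2)
distance, var = fuse_estimates([lidar, camera])
print(f"fused distance: {distance:.3f} m, std: {math.sqrt(var):.4f} m")
print("slow down:", should_slow_down([lidar, camera]))
```

Because the LiDAR is assumed to have a standard deviation three times smaller than the camera's, it dominates the fused estimate (about 2.01 m), and the fused uncertainty is lower than that of either sensor alone.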

These core capabilities are interdependent, as summarized in Table 1. Environmental interaction relies on sensor-data fusion, emotional engagement on AI-driven affective computing, and self-learning on reinforcement and imitation learning algorithms. Together, they enable intelligent robot systems to cope with the unpredictability of clinical settings.

| Capability | Description | Example Technologies |
|---|---|---|
| Environmental Interaction | Perceiving and adapting to physical environments for safe navigation and task execution | LiDAR, cameras, LLM-based coordination |
| Emotional Engagement | Recognizing and responding to human emotions through interactive communication | Natural language processing, affective computing |
| Self-Learning Evolution | Improving performance autonomously via data-driven learning and imitation | Reinforcement learning, neural networks |

Building on these capabilities, I propose a five-layer conceptual framework to guide the development and deployment of intelligent robot systems in clinical nursing. This framework, illustrated in Figure 1, progresses from inner ethical safeguards to outer ecosystem integration, ensuring a holistic approach. The layers are: Core Safety and Ethics Layer, Basic Functionality Layer, Intelligent Collaboration Layer, Human-Robot Interaction Layer, and Ecological Expansion Layer. Each layer addresses critical aspects of robot implementation, from technical robustness to societal acceptance.

The Core Safety and Ethics Layer forms the foundation, emphasizing system reliability, data security, and ethical compliance. Robots must, for instance, incorporate redundancy mechanisms to handle failures and follow medical ethics, such as respecting patient autonomy, through predefined behavioral guidelines. This layer can be modeled using a risk-assessment formula: $$ R = P \times S $$ where \( R \) represents risk, \( P \) the probability of an adverse event, and \( S \) its severity. By designing to minimize \( R \), intelligent robot systems can uphold safety standards.

The Basic Functionality Layer comprises perception, decision-making, and execution modules. Perception covers multimodal data acquisition (e.g., vital-signs monitoring), decision-making applies AI algorithms to tasks such as risk prediction, and execution carries out physical actions through manipulators. A unified model for this layer is: $$ A_t = f(P_t, D_t) $$ where \( A_t \) is the action at time \( t \), \( P_t \) the perceptual input, and \( D_t \) the decision output. Repeating this perception-decision-action cycle at every time step closes the loop and enables real-time responses, such as repositioning a patient when pressure-ulcer risk rises.
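
Both formulas can be made concrete with a small sketch. It assumes a hypothetical hazard register scored with \( R = P \times S \), plus a single perception-decision-action step for pressure-ulcer prevention. All names, probabilities, severity scores, and thresholds (32 mmHg interface pressure, 120 minutes without repositioning) are illustrative assumptions rather than clinical guidance, and the threshold rule merely stands in for whatever risk model a real robot would use.

```python
from dataclasses import dataclass


@dataclass
class Hazard:
    """One entry in a hypothetical hazard register for a nursing robot."""
    name: str
    probability: float  # P: assumed probability of the adverse event per shift
    severity: int       # S: ordinal score from 1 (negligible) to 5 (catastrophic)

    @property
    def risk(self) -> float:
        return self.probability * self.severity  # R = P x S


def flag_hazards(hazards, threshold):
    """Return hazards whose risk exceeds the threshold, highest risk first."""
    flagged = [h for h in hazards if h.risk > threshold]
    return sorted(flagged, key=lambda h: h.risk, reverse=True)


def perceive(interface_pressure_mmhg, minutes_in_position):
    """P_t: perceptual input, here from a hypothetical bed pressure sensor."""
    return {"pressure": interface_pressure_mmhg, "minutes": minutes_in_position}


def decide(p_t):
    """D_t: a toy threshold rule standing in for a learned risk model (assumed values)."""
    if p_t["pressure"] > 32 and p_t["minutes"] >= 120:
        return "high"
    return "low"


def act(p_t, d_t):
    """A_t = f(P_t, D_t): select an action from both the input and the decision."""
    if d_t == "high":
        return "reposition patient and notify nurse"
    if p_t["minutes"] >= 90:
        return "schedule repositioning check"
    return "continue monitoring"


register = [
    Hazard("collision with patient", 0.02, 5),
    Hazard("medication mis-delivery", 0.01, 4),
    Hazard("navigation stall", 0.20, 1),
]
print([h.name for h in flag_hazards(register, threshold=0.05)])

# One pass of the closed loop; a deployed system would repeat this at every time step t.
p_t = perceive(interface_pressure_mmhg=40, minutes_in_position=150)
print(act(p_t, decide(p_t)))
```

Note that a frequent but minor hazard (the navigation stall) can outrank a rare but severe one under \( R = P \times S \), which is why severity scales and thresholds need clinical review.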

The Intelligent Collaboration Layer focuses on system integration, adaptive learning, and multi-robot coordination. Here, robots act as edge nodes in a network, sharing data with hospital information systems and other devices. Collaborative algorithms such as multi-agent reinforcement learning optimize task allocation among robots. In an emergency, for example, one intelligent robot might handle triage while another fetches supplies, with their actions coordinated by a central AI. The learning component can be expressed as: $$ Q(s,a) \leftarrow Q(s,a) + \alpha \left[ r + \gamma \max_{a'} Q(s',a') - Q(s,a) \right] $$ where \( Q(s,a) \) is the estimated value of taking action \( a \) in state \( s \), \( \alpha \) the learning rate, \( r \) the reward, \( \gamma \) the discount factor, and \( s' \) the state reached after taking \( a \). This Q-learning update allows robots to refine their strategies through experience.

The Human-Robot Interaction Layer prioritizes seamless communication and trust-building with patients and staff. It involves natural language interfaces, emotion recognition, and behavior adaptation based on user feedback. Interaction data are logged for continuous improvement, so that robots can accommodate diverse individual needs.

Finally, the Ecological Expansion Layer addresses scalability, policy governance, and service optimization. Modular designs allow customization for different settings, while regulatory standards support long-term viability. This layer fosters a sustainable ecosystem in which intelligent robot systems evolve alongside societal change.
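
The Q-learning update can be illustrated with a minimal tabular sketch. It assumes a hypothetical five-cell ward corridor in which a single robot starts at cell 0 and must reach a supply room at cell 4, earning a reward of 1 on arrival and a penalty of 0.01 per step; the environment, hyperparameters, and function names are illustrative choices. This is single-agent Q-learning only and does not show multi-robot coordination.

```python
import random
from collections import defaultdict

N_CELLS = 5          # hypothetical corridor cells 0..4
GOAL = 4             # hypothetical supply room
ACTIONS = (-1, +1)   # move one cell left or right


def step(state, action):
    """Toy deterministic environment: returns (next_state, reward, done)."""
    next_state = min(max(state + action, 0), N_CELLS - 1)
    if next_state == GOAL:
        return next_state, 1.0, True
    return next_state, -0.01, False  # small step cost favors short routes


def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = defaultdict(float)  # Q(s, a), every entry starts at 0
    for _ in range(episodes):
        s, done = 0, False
        while not done:
            # epsilon-greedy: explore at random, otherwise exploit current Q
            if rng.random() < epsilon:
                a = rng.choice(ACTIONS)
            else:
                a = max(ACTIONS, key=lambda b: q[(s, b)])
            s_next, r, done = step(s, a)
            # A terminal state has no future value to bootstrap from.
            best_next = 0.0 if done else max(q[(s_next, b)] for b in ACTIONS)
            # Q(s,a) <- Q(s,a) + alpha * [r + gamma * max_a' Q(s',a') - Q(s,a)]
            q[(s, a)] += alpha * (r + gamma * best_next - q[(s, a)])
            s = s_next
    return q


def greedy_policy(q):
    """Best learned action in each non-goal cell."""
    return {s: max(ACTIONS, key=lambda b: q[(s, b)]) for s in range(N_CELLS) if s != GOAL}


q = q_learning()
print(greedy_policy(q))
```

With ε-greedy exploration the robot still tries detours during training, but because Q-learning is off-policy, the learned greedy policy heads straight for the supply room (action +1 in every non-goal cell).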

| Layer | Key Components | Implementation Examples |
|---|---|---|
| Core Safety and Ethics | Risk management, data privacy, ethical guidelines | Redundant systems, encryption, consent protocols |
| Basic Functionality | Perception, decision-making, execution | Sensor fusion, AI algorithms, robotic arms |
| Intelligent Collaboration | System integration, adaptive learning, multi-robot coordination | Edge computing, reinforcement learning, task allocation |
| Human-Robot Interaction | Communication, emotion recognition, trust mechanisms | Dialogue systems, affective computing, user feedback loops |
| Ecological Expansion | Modularity, policy compliance, service evolution | Interchangeable parts, regulatory frameworks, performance metrics |

The integration of intelligent robot systems into clinical nursing will inevitably reshape care delivery models. First, nursing services will shift toward automation and personalization. Robots that take over repetitive tasks such as rounds and disinfection free nurses to focus on complex decision-making and emotional support. This shift improves efficiency and reduces burnout; studies report that robot assistance lowered workload by up to 30% in general wards. Second, patient-nurse relationships will be redefined through emotional interaction. An intelligent robot capable of empathetic responses can provide companionship and mental health interventions that complement human care; in dementia care, for instance, robots have elicited positive emotional responses and improved patient well-being. The result is a more holistic approach that addresses physiological, psychological, and social needs. Third, nursing roles will evolve from task-oriented to supervisory and coordinating. Nurses may become managers of robot fleets, analyzing data streams and intervening in critical cases. New positions, such as AI-nursing coordinators, will emerge and require skills in technology management and ethics. This transformation not only elevates the profession but also expands career pathways in healthcare.

However, the adoption of intelligent robot systems faces significant challenges. Technically, AI hallucinations and limited multimodal perception undermine reliability in complex environments; remedies include curating high-quality training datasets and optimizing algorithms through clinical simulations. Ethically, data privacy and accountability become pressing concerns as robots collect sensitive information. Federated learning can mitigate these risks: it trains a shared model across devices without centralizing raw data by solving $$ \min_{\theta} \sum_{k=1}^{K} \frac{n_k}{n} F_k(\theta) $$ where \( \theta \) denotes the model parameters, \( K \) the number of participating devices, \( n_k \) the number of samples on device \( k \), \( n = \sum_{k=1}^{K} n_k \), and \( F_k \) the loss on device \( k \)'s local data. Additionally, clear regulations on robot autonomy levels, defining which tasks require human oversight, are essential. In clinical practice, resistance to change and integration issues may slow adoption. Nurses must actively participate in robot design and workflow redesign so that these systems enhance rather than disrupt care. Establishing evaluation metrics for robot performance and human-robot collaboration will be crucial for standardization.
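
Federated averaging (FedAvg) is one common way to optimize this weighted objective. The sketch below assumes three hypothetical sites with unequal amounts of synthetic data and a one-variable linear model as the shared model. In each round, sites run a few gradient steps on their own \( F_k \) and send only parameters to a server, which averages them with weights \( n_k / n \). The data, model, learning rate, and round count are illustrative assumptions.

```python
import random


def make_site_data(n, rng):
    """Synthetic local data for one hypothetical site: y = 2x + 1 plus small noise."""
    xs = [rng.random() for _ in range(n)]
    return [(x, 2.0 * x + 1.0 + rng.gauss(0.0, 0.1)) for x in xs]


def local_update(theta, data, lr=0.1, epochs=5):
    """A few epochs of gradient descent on the local loss F_k (mean squared
    error of y ~ w*x + b), starting from the current global parameters."""
    w, b = theta
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2.0 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2.0 * (w * x + b - y) for x, y in data) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return w, b


def federated_averaging(sites, rounds=200, theta=(0.0, 0.0)):
    """Each round: sites train locally, then the server averages their
    parameters with weights n_k / n. Raw data never leaves a site."""
    n = sum(len(data) for data in sites)
    for _ in range(rounds):
        updates = [(local_update(theta, data), len(data)) for data in sites]
        theta = (
            sum(w_k * n_k for (w_k, _), n_k in updates) / n,
            sum(b_k * n_k for (_, b_k), n_k in updates) / n,
        )
    return theta


rng = random.Random(42)
sites = [make_site_data(n_k, rng) for n_k in (20, 50, 80)]  # three sites of unequal size
w, b = federated_averaging(sites)
print(f"learned model: y = {w:.2f} * x + {b:.2f}")
```

Sites holding more data pull the average harder, mirroring the \( n_k / n \) weights in the objective, while the server only ever sees model parameters.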

| Challenge Category | Specific Issues | Proposed Solutions |
|---|---|---|
| Technical | AI inaccuracies, environmental adaptability | Enhanced datasets, algorithm tuning, simulated testing |
| Ethical and Safety | Data privacy, ethical decision-making | Federated learning, ethical frameworks, transparency |
| Clinical Application | Workflow integration, user acceptance | Nurse-led design, training programs, performance standards |

In conclusion, the era of embodied intelligence is poised to revolutionize clinical nursing through intelligent robot systems. By harnessing environmental interaction, emotional engagement, and self-learning evolution, these robots can transition from assistants to integral team members. The five-layer framework provides a roadmap for safe and effective deployment, while the anticipated shifts in care models underscore the need for proactive adaptation. As we move forward, collaboration among technologists, clinicians, and policymakers will be key to realizing the full potential of intelligent robot solutions. I believe that through continuous innovation and ethical stewardship, nursing can embrace a future where technology and compassion coalesce, ultimately enhancing patient outcomes and professional satisfaction.