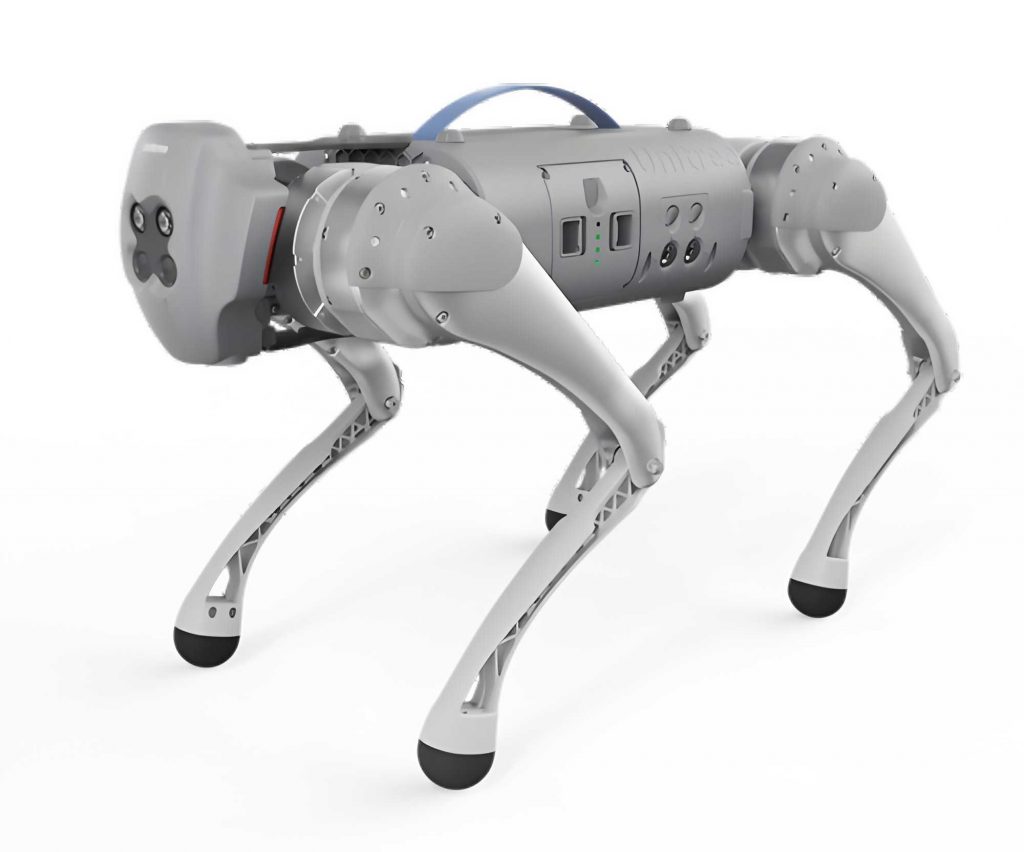

In recent years, the development of quadruped robots, often referred to as robot dogs, has garnered significant attention due to their potential applications in various fields such as search and rescue, surveillance, and industrial automation. These quadruped robots exhibit remarkable agility and adaptability in navigating complex terrains, making them superior to wheeled or tracked robots in many scenarios. However, achieving precise locomotion speed tracking control remains a critical challenge, as inaccuracies can lead to instability, inefficient energy consumption, or failure in task execution. Traditional control methods, including model-based approaches and classical reinforcement learning, often struggle with generalization across diverse environments and real-time adaptation to dynamic conditions. This paper addresses these limitations by proposing a novel control algorithm that integrates shaping rewards into a policy modulating trajectory generator (PMTG) framework, enhancing the accuracy of locomotion velocity tracking for quadruped robots.

The core of our approach lies in leveraging deep reinforcement learning (DRL) to optimize the control policies of quadruped robots. DRL has shown promise in learning complex behaviors from high-dimensional sensory inputs, but it often requires extensive training data and may suffer from slow convergence or suboptimal performance in locomotion tasks. To overcome these issues, we introduce a shaping reward mechanism that guides the learning process by evaluating the error between reference and target locomotion speeds. This is combined with an improved trajectory generator based on Hopf oscillators, which generates reference gait motions with computable locomotion speeds. By modulating these trajectories through a policy network, our method ensures that the robot dog can accurately track desired velocities while maintaining stability and efficiency. The integration of mirror symmetry loss further enhances tracking precision and reduces locomotion yaw, contributing to more robust and natural movements for the quadruped robot.

In this work, we first construct a parameterized trajectory generator (TG) using Hopf oscillators to produce reference gait motions. The Hopf oscillator is a nonlinear dynamical system that can generate stable limit cycles, making it suitable for modeling rhythmic movements such as walking or running in a quadruped robot. The dynamics of the Hopf oscillator are described by the following equations:

$$ \dot{x} = \alpha (\mu - r^2)x - \omega z $$

$$ \dot{z} = \alpha (\mu - r^2)z + \omega x $$

where \( r = \sqrt{x^2 + z^2} \), \( x = r \cos \phi \), and \( z = r \sin \phi \), with \( \phi \) representing the phase of the oscillator. Here, \( A = \sqrt{\mu} \) denotes the amplitude of the limit cycle, \( \omega \) is the natural frequency, and \( \alpha \) controls the convergence rate to the limit cycle. For a quadruped robot, we map the oscillator outputs to joint angle signals for the legs, enabling the generation of periodic gait patterns. For instance, the signals for the thigh and knee joints can be derived as:

$$ S_{\text{thigh}} = x $$

$$ S_{\text{knee}} = \begin{cases} 0, & z \geq 0 \\ -\frac{A_k}{A_h} z, & z < 0 \end{cases} $$

where \( A_h \) and \( A_k \) are the amplitudes for the thigh and knee joints, respectively, and \( \mu = A_h^2 \). To adapt the oscillator for different gait phases and locomotion speeds, we parameterize the frequency \( \omega \) as:

$$ \omega = \frac{\pi}{\beta T (e^{-a z} + 1)} + \frac{\pi}{(1 - \beta) T (e^{a z} + 1)} = \frac{\omega_{\text{st}}}{e^{-a z} + 1} + \frac{\omega_{\text{sw}}}{e^{a z} + 1} $$

where \( \omega_{\text{st}} = \frac{\pi}{\beta T} \) is the stance phase frequency, \( \omega_{\text{sw}} = \frac{\pi}{(1 - \beta) T} \) is the swing phase frequency, \( T \) is the gait period, \( \beta \) is the duty factor, and \( a \) regulates the transition smoothness between phases. This parameterization allows the trajectory generator to produce reference motions with computable locomotion speeds, which is crucial for accurate tracking in a robot dog.
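The oscillator dynamics, the phase-dependent frequency, and the thigh/knee mapping can be sketched numerically as follows. This is a minimal illustration using forward-Euler integration, not the authors' implementation; the function names and the values chosen for `alpha`, `a`, `beta`, `A_k`, and `dt` are assumptions made for the example only.

```python
import numpy as np

def hopf_step(x, z, dt, mu, alpha, T, beta, a):
    """Advance the Hopf oscillator by one forward-Euler step."""
    r2 = x * x + z * z
    omega_st = np.pi / (beta * T)          # stance-phase frequency
    omega_sw = np.pi / ((1.0 - beta) * T)  # swing-phase frequency
    # Smooth blend between stance and swing frequencies, driven by z.
    omega = omega_st / (np.exp(-a * z) + 1.0) + omega_sw / (np.exp(a * z) + 1.0)
    dx = alpha * (mu - r2) * x - omega * z
    dz = alpha * (mu - r2) * z + omega * x
    return x + dt * dx, z + dt * dz

def joint_signals(x, z, A_h, A_k):
    """Map the oscillator state to thigh and knee reference signals."""
    s_thigh = x
    s_knee = 0.0 if z >= 0.0 else -(A_k / A_h) * z
    return s_thigh, s_knee

def simulate(A_h=0.2, A_k=0.3, T=0.6, beta=0.6, alpha=50.0, a=50.0,
             dt=1e-3, steps=5000):
    """Integrate from a point near the origin; all defaults are illustrative."""
    x, z = 0.01, 0.0
    mu = A_h ** 2  # limit-cycle amplitude is sqrt(mu) = A_h
    traj = np.empty((steps, 2))
    for i in range(steps):
        x, z = hopf_step(x, z, dt, mu, alpha, T, beta, a)
        traj[i] = joint_signals(x, z, A_h, A_k)
    return traj, (x, z)

traj, (x_end, z_end) = simulate()
print("final radius:", np.hypot(x_end, z_end))
```

Starting away from the limit cycle shows the role of \( \alpha \): the radius is pulled toward \( \sqrt{\mu} = A_h \) regardless of the initial state, while the knee signal stays non-negative by construction.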

The improved PMTG algorithm modulates these reference trajectories through a policy network trained with deep reinforcement learning. The policy takes as input the robot’s state, including the current locomotion velocity, reference velocity, phase signals, orientation, and linear and angular velocities. The action space consists of joint angles and TG parameters, such as amplitude and period. To enhance learning efficiency, we employ a proximal policy optimization (PPO) algorithm, which optimizes the policy by maximizing the expected cumulative reward. The objective function for PPO is given by:

$$ L(\theta) = \mathbb{E}_{\pi_\theta} \left[ \min\left( \rho_t \hat{A}(s_t, a_t), \text{clip}(\rho_t, 1 - \epsilon, 1 + \epsilon) \hat{A}(s_t, a_t) \right) \right] $$

where \( \rho_t = \frac{\pi_\theta(a_t | s_t)}{\pi_{\theta_{\text{old}}}(a_t | s_t)} \) is the probability ratio, \( \hat{A}(s_t, a_t) \) is the advantage function estimated using generalized advantage estimation (GAE), and \( \epsilon \) is a clipping parameter that constrains policy updates to prevent large deviations. Additionally, we incorporate a value function \( V_\phi(s) \) trained to minimize the mean-squared error with the returns:

$$ L(\phi) = \frac{1}{2} \left( V_\phi(s) - R(s, a) \right)^2 $$
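The clipped surrogate and the value loss above can be written compactly in NumPy. This is a sketch over a batch of precomputed log-probabilities and advantages, with hypothetical function names; a real training loop would compute these quantities with an automatic-differentiation framework.

```python
import numpy as np

def ppo_clip_objective(logp_new, logp_old, adv, eps=0.2):
    """Batch estimate of the clipped PPO surrogate (to be maximized)."""
    ratio = np.exp(logp_new - logp_old)            # rho_t
    unclipped = ratio * adv
    clipped = np.clip(ratio, 1.0 - eps, 1.0 + eps) * adv
    return np.mean(np.minimum(unclipped, clipped))

def value_loss(v_pred, returns):
    """Half mean-squared error between value predictions and returns."""
    return 0.5 * np.mean((v_pred - returns) ** 2)

# Two samples: one ratio above the clip range, one below it.
logp_new = np.log(np.array([1.5, 0.5]))
logp_old = np.zeros(2)
adv = np.array([1.0, -1.0])
print(ppo_clip_objective(logp_new, logp_old, adv))
```

In the first sample the ratio 1.5 is clipped to 1.2 because the advantage is positive; in the second, the ratio 0.5 is clipped to 0.8 and the more pessimistic value is kept because the advantage is negative.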

The key innovation in our method is the introduction of a shaping reward that facilitates locomotion speed tracking. The reward function is designed to penalize deviations from the target velocity while encouraging the generation of reference motions that align with the desired speed. The shaping reward component is defined as:

$$ R_{\text{vel}} = (1 - \| v_{\text{ref}} - v_R \|) \cdot \exp(-C \cdot \| v_R - v_T \|) $$

where \( v_{\text{ref}} \) is the reference velocity computed from the TG, \( v_R \) is the robot’s current velocity, \( v_T \) is the target velocity, and \( C = \frac{1}{2 \cdot \| v_R \|} \) is a scaling factor. The first factor penalizes any gap between the TG reference velocity and the robot’s current velocity, while the exponential factor penalizes the gap between the current and target velocities. Maximizing both therefore drives the robot toward the target and, indirectly, pulls the TG reference velocity toward the target as well, improving the overall tracking performance for the quadruped robot. The complete reward function includes additional terms for orientation control, foot contact, and smoothness, expressed as:

$$ R = R_{\text{vel}} + 0.5 \cdot R_{\text{ori}} + 0.2 \cdot R_{\text{motion}} + R_{\text{aux}} $$

where \( R_{\text{ori}} = \exp(-24 \cdot \| \omega_z - \omega_{\text{cmd}_z} \|) \) penalizes deviations in yaw rate, \( R_{\text{motion}} = \exp(-\sum_{i=0}^{3} \| I_{t_i, \text{phase}} - I_{t_i, \text{contact}} \|) \) encourages proper foot contact sequencing, and \( R_{\text{aux}} = -0.001 \cdot R_{\text{smooth}} - 0.2 \cdot R_{\text{balance}} - 0.00002 \cdot R_{\text{torque}} \) includes penalties for joint smoothness, balance, and torque usage. Here, \( R_{\text{smooth}} = \| a_{t-2} - 2 \cdot a_{t-1} + a_t \| \), \( R_{\text{balance}} = \| v_{\text{roll}} \| + \| v_{\text{pitch}} \| \), and \( R_{\text{torque}} = \| \tau \| \).
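As a rough illustration, the reward terms can be assembled as below. The function names are hypothetical, and the small `eps` guard against division by zero when the robot is at rest is an assumption of this sketch; the text does not say how that case is handled.

```python
import numpy as np

def velocity_reward(v_ref, v_robot, v_target, eps=1e-6):
    """Shaping reward R_vel; eps is an assumed guard for v_robot near zero."""
    v_ref, v_robot, v_target = (
        np.atleast_1d(np.asarray(v, dtype=float))
        for v in (v_ref, v_robot, v_target)
    )
    c = 1.0 / (2.0 * max(np.linalg.norm(v_robot), eps))
    consistency = 1.0 - np.linalg.norm(v_ref - v_robot)    # TG vs. robot
    tracking = np.exp(-c * np.linalg.norm(v_robot - v_target))  # robot vs. target
    return float(consistency * tracking)

def orientation_reward(yaw_rate, yaw_rate_cmd):
    """R_ori: penalizes deviation of the yaw rate from its command."""
    return float(np.exp(-24.0 * abs(yaw_rate - yaw_rate_cmd)))

def total_reward(r_vel, r_ori, r_motion, r_smooth, r_balance, r_torque):
    """Weighted sum of all reward terms, using the weights given in the text."""
    r_aux = -0.001 * r_smooth - 0.2 * r_balance - 0.00002 * r_torque
    return r_vel + 0.5 * r_ori + 0.2 * r_motion + r_aux

# Robot slightly slower than the TG reference and faster than the target.
print(velocity_reward(v_ref=0.6, v_robot=0.5, v_target=0.4))
```

When all three velocities coincide, `velocity_reward` returns 1, its maximum; any mismatch in either factor lowers it.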

To further improve the robustness of the quadruped robot, we integrate a mirror symmetry loss into the policy optimization. This loss encourages symmetric gait patterns, which are essential for stable locomotion in a robot dog. The symmetry loss is defined as:

$$ L_{\text{sym}}(\theta) = \sum_{i} \| \pi_\theta(s_i) - \psi_a (\pi_\theta (\psi_o (s_i))) \| $$

where \( \psi_o \) and \( \psi_a \) are functions that apply mirror transformations to the observations and actions, respectively. This loss is added to the PPO objective function with a weighting factor \( \alpha = 0.001 \) (unrelated to the oscillator convergence rate \( \alpha \) defined earlier), resulting in the combined loss:

$$ L(\theta) = L_{\text{PPO}}(\theta) + \alpha L_{\text{sym}}(\theta) $$

This approach promotes energy-efficient and natural movements by leveraging the inherent symmetry of quadrupedal locomotion.
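The symmetry loss can be illustrated on a toy problem. In the sketch below the observation and action each have one "left" and one "right" component and the mirror maps simply swap them; this layout, like the function names, is an assumption for illustration. The actual mirror transformations depend on the robot's joint and sensor ordering, which the text does not specify.

```python
import numpy as np

def mirror_symmetry_loss(policy, states, mirror_obs, mirror_act):
    """Sum over states of || pi(s) - psi_a(pi(psi_o(s))) ||."""
    total = 0.0
    for s in states:
        a = policy(s)
        a_mirrored = mirror_act(policy(mirror_obs(s)))
        total += np.linalg.norm(a - a_mirrored)
    return total

def combined_loss(l_ppo, l_sym, weight=0.001):
    """PPO loss plus the weighted symmetry loss."""
    return l_ppo + weight * l_sym

# Toy mirror maps: swap the left and right components.
swap = lambda v: v[::-1]

symmetric_policy = lambda s: 2.0 * s                     # treats both sides alike
asymmetric_policy = lambda s: s * np.array([1.0, 2.0])   # favors one side

states = [np.array([1.0, 0.0])]
print(mirror_symmetry_loss(symmetric_policy, states, swap, swap))
print(mirror_symmetry_loss(asymmetric_policy, states, swap, swap))
```

A policy that responds identically to a state and its mirror image incurs zero loss, whereas the asymmetric policy is penalized in proportion to the mismatch.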

We evaluated our method through simulation experiments using the RaiSim environment. The training parameters for the PPO algorithm are summarized in the table below:

| Training Parameter | Value |
|---|---|
| Initial Learning Rate \( l_r \) | 5e-4 |
| GAE Parameter \( \gamma \) | 0.996 |
| GAE Parameter \( \lambda \) | 0.95 |
| PPO Clipping Parameter \( \epsilon \) | 0.2 |
| Number of Episodes | 800 |
| Steps per Episode | 1000 |
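For context, the \( \gamma \) and \( \lambda \) values in the table enter the advantage estimate through the standard GAE recursion. The sketch below is a generic version with a hypothetical function name; it assumes a single rollout with no episode termination inside it and is not taken from the authors' code.

```python
import numpy as np

def gae(rewards, values, last_value, gamma=0.996, lam=0.95):
    """Generalized advantage estimation over one rollout (no terminations)."""
    rewards = np.asarray(rewards, dtype=float)
    values = np.asarray(values, dtype=float)
    adv = np.zeros(len(rewards))
    next_value, running = last_value, 0.0
    for t in reversed(range(len(rewards))):
        # One-step temporal-difference residual.
        delta = rewards[t] + gamma * next_value - values[t]
        # Exponentially weighted sum of future residuals.
        running = delta + gamma * lam * running
        adv[t] = running
        next_value = values[t]
    returns = adv + values  # regression targets for the value function
    return adv, returns

adv, returns = gae(rewards=[1.0, 1.0, 1.0], values=[0.5, 0.5, 0.5], last_value=0.5)
print(adv, returns)
```

With \( \gamma \) this close to 1, rewards many steps ahead still contribute noticeably to the advantage, which suits a task where velocity tracking is judged over whole gait cycles.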

The learning rate is adaptively adjusted based on the Kullback-Leibler divergence to maintain stable training. The observation space includes the robot’s velocity, reference velocity, phase signals, height, orientation, and linear and angular velocities, while the action space comprises joint angles and TG parameters. The TG parameters are scaled to practical ranges: the amplitude \( A_h' = 0.2 \cdot |A_h| + 0.1 \) for \( A_h \in [-1, 1] \), resulting in \( A_h' \in [0.1, 0.3] \), and the period \( T' = 0.6 \cdot |T| + 0.4 \) for \( T \in [-1, 1] \), yielding \( T' \in [0.4, 1.0] \). Using the relationship \( v_{\text{ref}} = \frac{4 l \cos \theta_0 \sin A_h'}{\beta T'} \), where \( l \) is the leg length and \( \theta_0 \) is the nominal joint angle, the reference velocity ranges from 0.175 m/s to 1.30 m/s, covering the target velocities of 0.2 m/s to 1.2 m/s for the quadruped robot.
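The stated velocity range can be checked for internal consistency even though \( l \), \( \theta_0 \), and \( \beta \) are not given here. The sketch below lumps them into a single constant `k` multiplying \( \sin A_h' / T' \), backs `k` out of the stated minimum of 0.175 m/s, and then evaluates the maximum. The lumped constant and the function names are assumptions of this example.

```python
import math

def scale_amplitude(a_raw):
    """Map a raw policy output in [-1, 1] to an amplitude in [0.1, 0.3]."""
    return 0.2 * abs(a_raw) + 0.1

def scale_period(t_raw):
    """Map a raw policy output in [-1, 1] to a period in [0.4, 1.0]."""
    return 0.6 * abs(t_raw) + 0.4

def reference_velocity(k, amp, period):
    """v_ref = k * sin(amp) / period, with k lumping the geometry and duty factor."""
    return k * math.sin(amp) / period

amp_min, amp_max = scale_amplitude(0.0), scale_amplitude(1.0)
per_min, per_max = scale_period(0.0), scale_period(1.0)

# Slowest gait: smallest amplitude, longest period. Solve for k from 0.175 m/s.
k = 0.175 * per_max / math.sin(amp_min)

# Fastest gait: largest amplitude, shortest period.
v_max = reference_velocity(k, amp_max, per_min)
print(f"k = {k:.3f}, v_max = {v_max:.3f} m/s")
```

The maximum computed this way comes out within about 0.01 m/s of the stated 1.30 m/s, so the two endpoints of the range are mutually consistent under the scaling rules.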

The performance of our improved PMTG algorithm was compared against baseline methods, including the original PMTG, PMTG with shaping reward only, and PMTG with symmetry loss only. The results, averaged over 5000 steps (50 seconds) of simulation, are presented in the following table:

| Method | Tracking Error (m/s) | Yaw Deviation |
|---|---|---|
| PMTG + Shaping Reward + Symmetry Loss | 0.041 | 0.007 |
| PMTG + Shaping Reward | 0.091 | 0.015 |
| PMTG + Symmetry Loss | 0.052 | 0.008 |
| Original PMTG | 0.068 | 0.010 |

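As shown, our full method (PMTG with shaping reward and symmetry loss) achieves the lowest tracking error (0.041 m/s) and yaw deviation (0.007), demonstrating its advantage in locomotion speed tracking for the quadruped robot. The two components do not contribute equally in isolation: the symmetry loss alone lowers the tracking error relative to the original PMTG (0.052 m/s versus 0.068 m/s), whereas the shaping reward alone yields a higher tracking error (0.091 m/s) and yaw deviation (0.015) than the original PMTG. The best performance is obtained only when the two are combined, highlighting the importance of using both components together.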
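For a relative view of the tracking-error column, the short script below computes each variant's percentage change with respect to the original PMTG. It only restates the values from the table; the helper name is hypothetical.

```python
tracking_error = {
    "PMTG + Shaping Reward + Symmetry Loss": 0.041,
    "PMTG + Shaping Reward": 0.091,
    "PMTG + Symmetry Loss": 0.052,
    "Original PMTG": 0.068,
}

def relative_change(value, reference):
    """Percentage change of value with respect to reference."""
    return 100.0 * (value - reference) / reference

baseline = tracking_error["Original PMTG"]
changes = {
    name: relative_change(err, baseline)
    for name, err in tracking_error.items()
    if name != "Original PMTG"
}

for name, pct in changes.items():
    # Negative values mean a lower tracking error than the original PMTG.
    print(f"{name}: {pct:+.1f}%")
```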

Further analysis of the trajectory generator parameters reveals that the improved PMTG algorithm effectively adjusts the TG parameters to match the target locomotion speeds. For example, the amplitude \( A_h \) and period \( T \) converge to values that produce reference velocities close to the target, as illustrated by the following relationship derived from the Hopf oscillator model:

$$ v_{\text{ref}} = \frac{S}{T} = \frac{4 l \cos \theta_0 \sin A_h}{\beta T} $$

where \( S \) is the stride length. This computable relationship allows the policy to learn appropriate TG parameters, facilitating accurate velocity tracking. In contrast, without the shaping reward, the TG parameters may not align well with the target, leading to larger errors. The integration of mirror symmetry also contributes to stable foot contact sequences and reduced roll, pitch, and yaw angles during locomotion, as observed in the simulation results. For instance, the roll, pitch, and yaw angles remain within ±0.012 radians across different target velocities, indicating high stability for the robot dog.

In conclusion, our proposed method significantly improves locomotion speed tracking control for quadruped robots by incorporating shaping rewards and mirror symmetry into the PMTG framework. The use of Hopf oscillators enables the generation of reference gait motions with computable velocities, while the shaping reward guides the policy to minimize tracking errors. The symmetry loss promotes natural and energy-efficient movements, enhancing overall robustness. Simulation experiments confirm that our approach outperforms existing methods in terms of tracking accuracy and stability, making it a promising solution for real-world applications of robot dogs. Future work will focus on extending this method to more dynamic environments and real-hardware implementation to validate its practical efficacy.

Control algorithms for quadruped robots such as the one presented here are a step toward more autonomous and reliable robotic systems. By combining deep reinforcement learning with biomechanically inspired models, they address some of the limitations of traditional control strategies in complex tasks. As robot dog technology matures, accurate velocity tracking of this kind should support broader adoption in sectors ranging from disaster response to personal assistance.